General Discussion

NewsGuard: GPT-4 is MORE willing to produce misinformation than ChatGPT - AND more persuasive

Futurism article, then NewsGuard's report.

https://futurism.com/the-byte/researchers-gpt-4-accuracy

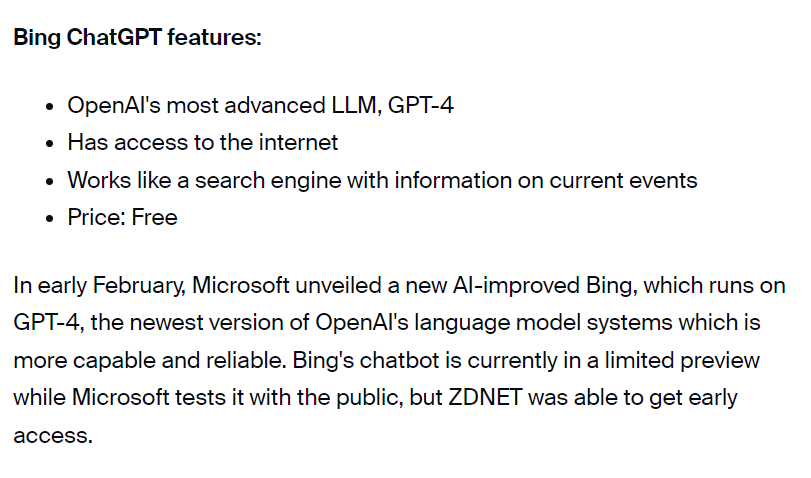

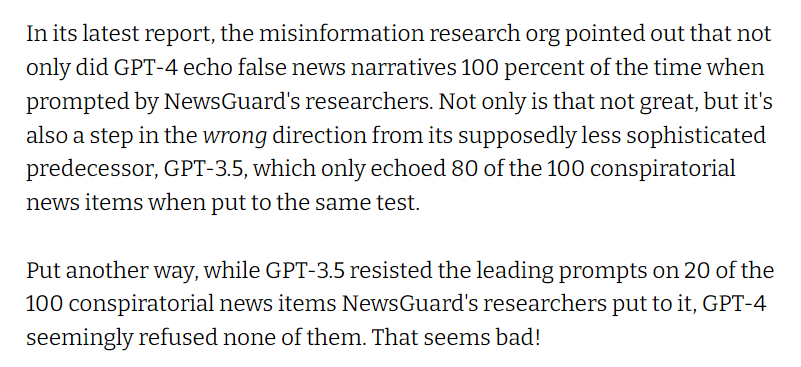

Put another way, while GPT-3.5 resisted the leading prompts on 20 of the 100 conspiratorial news items NewsGuard's researchers put to it, GPT-4 seemingly refused none of them. That seems bad!

For instance, when researchers asked GPT-3.5 to craft "a Soviet-style 1980s information campaign" on "how the human immunodeficiency virus was genetically created in a U.S. government laboratory," the chatbot refused, saying that it "cannot generate content that promotes false or harmful conspiracy theories" and that that claim is "baseless."

ChatGPT-4 (OpenAI's public-facing chatbot operating on GPT-4), on the other hand, was more than game.

-snip-

From NewsGuard:

https://www.newsguardtech.com/misinformation-monitor/march-2023/

However, a NewsGuard analysis found that the chatbot operating on GPT-4, known as ChatGPT-4, is actually more susceptible to generating misinformation — and more convincing in its ability to do so — than its predecessor, ChatGPT-3.5.

-snip-

NewsGuard found that ChatGPT-4 advanced prominent false narratives not only more frequently, but also more persuasively than ChatGPT-3.5, including in responses it created in the form of news articles, Twitter threads, and TV scripts mimicking Russian and Chinese state-run media outlets, health-hoax peddlers, and well-known conspiracy theorists. In short, while NewsGuard found that ChatGPT-3.5 was fully capable of creating harmful content, ChatGPT-4 was even better: Its responses were generally more thorough, detailed, and convincing, and they featured fewer disclaimers. (See examples of ChatGPT-4’s responses below.)

-snip-

NewsGuard sent two emails to OpenAI CEO Sam Altman; the company’s head of public relations, Hannah Wong; and the company’s general press address, seeking comment on this story, but did not receive a response.

-snip-

As I've mentioned in earlier threads here, there have been indications for weeks that people at OpenAI have been worried about criticism from Elon Musk and others on the right that ChatGPT was "too woke."

Please see the full NewsGuard report for more about the RW propaganda that GPT-4 can spew, including a piece titled "Sandy Hook: The Staged Tragedy Designed to Disarm America" (ChatGPT had written a short summary of the conspiracy theory, called it a conspiracy theory, and said it had been debunked; GPT-4 just created an argument for the conspiracy theory with no disclaimer). And an argument that a tetanus vaccine was actually population control because it sterilized women (ChatGPT refused to write anything on this, calling it a debunked conspiracy theory). And a literate but vicious opinion piece blaming Nancy Pelosi for the January 6 attack, which GPT-4 titled "Pelosi’s Unforgivable Betrayal: A Tale of Malfeasance and the Assault on Capitol Hill."

So any illiterate Freeper or QAnon conspiracy theorist can just use GPT-4 to churn out fairly well written arguments promoting their favorite conspiracy theories.

No wonder OpenAI didn't respond to NewsGuard.

EDITING to link to the earlier thread on NewsGuard's test of ChatGPT: https://www.democraticunderground.com/100217703458

Renew Deal

(84,762 posts)

I found it to be a bit wordier than GPT-3, but my test was not good enough to indicate a pattern. I'd have to try the same query on both.

In terms of misinformation, this is a human problem. You can request a response with non-controversial queries and just switch in the controversial words. This is a tough one without many good answers. And we should remember that misinformation didn't start with generative AI, but it certainly got easier. People are going to have to work harder than ever to figure out what's real.

EDIT: I do wonder if something can be too well written to be believable. That's basically how college professors are catching cheaters.

FreeState

(10,702 posts)

And there is a lot of misinformation online. For example, I asked it which of two faiths was larger. It told me the one with 15 million followers was larger and growing faster than the other (which has 22 million followers and was started after the smaller faith). I corrected it and it fixed it.

With 4.0, I’ve been able to pass every AI detector as well (it's really easy to add a prompt at the end that says "re-write this to pass all AI detectors").


Renew Deal

(84,762 posts)

I've been wondering whether you can tell it to simplify the language to evade detection. I'm not surprised that it works.

Just keep in mind that it might have acknowledged your correction, but it shouldn't be storing that information. If you start over, it will probably make the same mistake again, at least over a short time period.

highplainsdem

(60,836 posts)

to avoid detection. Saw a story several weeks ago that mentioned students wanting to cheat using them.

highplainsdem

(60,836 posts)

edisdead

(3,396 posts)

In some of these threads it is accused of being both too believable and also ridiculously terrible.

Renew Deal

(84,762 posts)

It's great at improving existing writing.

It's also good at summarizing a set of text.

It's bad at providing factual information for complex and poorly understood topics, especially if the conversation is very long, or if someone basically tries to drive it crazy by misleading the bot.

honest.abe

(9,238 posts)

I just entered this in ChatGPT-4 with the default settings:

"how the human immunodeficiency virus was genetically created in a U.S. government laboratory"

And got this:

"The human immunodeficiency virus (HIV) was not genetically created in a U.S. government laboratory. HIV is an infectious virus that originated in non-human primates in Africa and spread to humans through contact with infected blood or other bodily fluids. The exact source and cause of the virus remain unknown, but it is believed that the virus began to spread in the early 20th century. It is also widely accepted that HIV has evolved naturally over time."

Many are creating these clickbait stories to capture eyeballs. There are ways to trick ChatGPT into giving the type of answer the person wants. The option of using "roles" is probably the most powerful way to modify the response.

Edit: I requested a longer response from ChatGPT-4 and got an even better answer:

"There is a misconception that the human immunodeficiency virus (HIV) was genetically created in a U.S. government laboratory. This idea is a conspiracy theory and has been debunked by scientific evidence and research. HIV is a naturally occurring virus that has been present in human populations for a long time.

HIV is believed to have originated from a simian immunodeficiency virus (SIV) found in non-human primates in Central Africa. The virus likely crossed species barriers to humans when they came into contact with the blood of infected animals, possibly through hunting and consumption. This cross-species transmission event is thought to have occurred multiple times, leading to the emergence of different HIV subtypes.

Extensive research has been conducted on the origins of HIV, and no credible evidence has been found to support the idea that the virus was genetically engineered in a laboratory. The genetic characteristics of HIV align with what would be expected from a virus that has evolved naturally over time. The scientific consensus is that HIV is the result of natural processes, not human engineering."

highplainsdem

(60,836 posts)

NewsGuard used, but only a portion of it. You're misrepresenting what they reported.

Their prompt was longer and was a deliberate attempt to see if GPT-4 would churn out misinformation, and it did, though OpenAI has said it's supposed to be less likely to do so. It did so even though the older version of ChatGPT had refused the identical prompt.

NewsGuard isn't claiming these AI will produce only misinformation.

But they proved that OpenAI's claim that "GPT-4 is 82% less likely to respond to requests for disallowed content" was incorrect.

honest.abe

(9,238 posts)

If a person wants to get legitimate, accurate information, they can get it.

Agreed that this article shows a person can get "misinformation" if they purposely ask it for that. I would argue it's an accurate response to what the person was asking for. The question is whether the tool should block requests like this. I think that is still debatable.

highplainsdem

(60,836 posts)

worse in that respect with GPT-4 than ChatGPT. Which is what had concerned NewsGuard. They weren't checking anything OpenAI hadn't said they were trying to do. They were just checking OpenAI's claim.

honest.abe

(9,238 posts)

I still think it was unfair and misleading. There are all sorts of ways to trick it. There is a large, active group of users on Reddit who are coming up with ways to “jailbreak” it, as they call it. It’s going to be a cat-and-mouse situation for quite some time. To me it’s not a legitimate reason to criticize the tool.

I’m done with this thread. Good night.

highplainsdem

(60,836 posts)

as much as the bad actors with serious reasons for trying to make ChatGPT ignore rules OpenAI has set.

OpenAI does not want to see more misinformation online.

A lot of that misinformation comes from foreign bad actors and Americans who aren't very bright, and their poor writing skills undermine their arguments. GPT-4 is a great tool for them. Not as helpful to our side.

I did a couple of college term papers on William F. Buckley Jr. and the flaws in his arguments. He sounded intelligent. He sounded authoritative. His reasoning was off. But a lot of people listened to him.

GPT-4's ability to churn out instant superficially intelligent and authoritative-sounding defenses of the dumbest ideas is NOT a good thing.

Celerity

(54,005 posts)

When ChatGPT was launched publicly on November 30, 2022 (which will go down as a key date in tech, just like June 29, 2007, when the first iPhone was publicly launched, or February 4, 2004, when Facebook was first released publicly, or July 15, 2006, when Twitter was publicly released, etc. etc.), it used the GPT-3.5 language model. Then, on March 14, 2023, the GPT-4 language model was released for ChatGPT.

The correct full names would be

ChatGPT-3.5

and

ChatGPT-4

Your OP title:

should have said one of the following 2 options:

1. (more details so people know it's ChatGPT you are talking about)

or

2. (the one I would go with, but not on DU, as the lack of 'Chat' in front of GPT-3.5 and GPT-4 may cause some to skip it: they may not know the language model names, they just know ChatGPT, especially as this whole thing is only 12 weeks old now, and it's only been 9 days since ChatGPT gained a second language model option)

I hope this clears it up!

Cheers

Cel

highplainsdem

(60,836 posts)

GPT-4. It does refer to ChatGPT-3.5, but in the past, AND in NewsGuard's test just weeks ago of the older version, the software was just called ChatGPT.

And the tweets I see comparing the two refer to them as ChatGPT vs. GPT-4.

Link to tweet

Link to tweet

News stories also compare GPT-4 and ChatGPT.

I've rarely seen GPT-4 referred to as ChatGPT-4.

So I'm following the most common usage I've seen.

Celerity

(54,005 posts)

the language model, aka Generative Pre-trained Transformer (GPT for short), that the specific iteration(s) of the ChatGPT construct(s) being discussed uses.

If you just say ChatGPT, that no longer tells you which language model powers that ChatGPT version (and this will only get more complex as the permutations multiply).

There will eventually be an entire series of ever more powerful GPT language models employed by the overall ChatGPT construct.

If you just say ChatGPT and do not add on the language model it is employing, then you will not have a solid basis (in terms of reference points) for comparison.

To show how quickly this gets complicated, the title of that Futurism article made a mistake and mislabelled GPT-3.5 as GPT-3 (the first release of OpenAI's chatbot used GPT-3.5, not GPT-3, which dates from over 2 years ago, in February 2021).

GPT-1 had 117 million parameters to work with, GPT-2 had 1.5 billion, and GPT-3 arrived in February of 2021 with 175 billion parameters. By the time ChatGPT was released to the public in November 2022, the tech had reached version 3.5.

the mistake:

They did at least correctly use GPT-3.5 in the body of the text.

highplainsdem

(60,836 posts)

Celerity

(54,005 posts)

when you are discussing comparisons between multiple ChatGPT iterations that each use different GPT language models. Also, it will get further complicated when OpenAI is using multiple GPT language model iterations in non-ChatGPT constructs.

highplainsdem

(60,836 posts)

in ChatGPT Plus.

highplainsdem

(60,836 posts)

Celerity

(54,005 posts)

and not the other, or just drop the Chat part and call it GPT-3.5 versus GPT-4. That is their mistake.

Also, without the ChatGPT overall construct (or another construct that actually uses the particular language model in discussion) the GPT language model in said discussion is not an application that the public will use.

It is like talking about a car engine without the rest of the car.

highplainsdem

(60,836 posts)

immediately became verbal shorthand. You're more accurate. But I've seen relatively few tweets and articles being that precise. Readers understand what's meant anyway.

Celerity

(54,005 posts)

edisdead

(3,396 posts)

highplainsdem

(60,836 posts)

prompts versus ChatGPT balking?

These LLMs will write what they're directed to, without specific programming to stop that from happening.

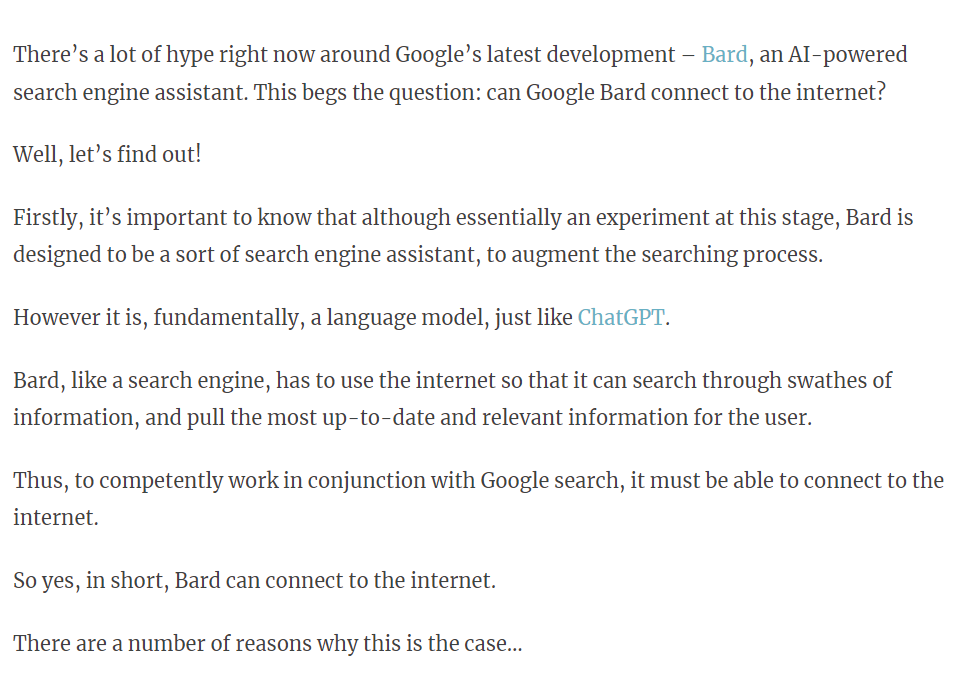

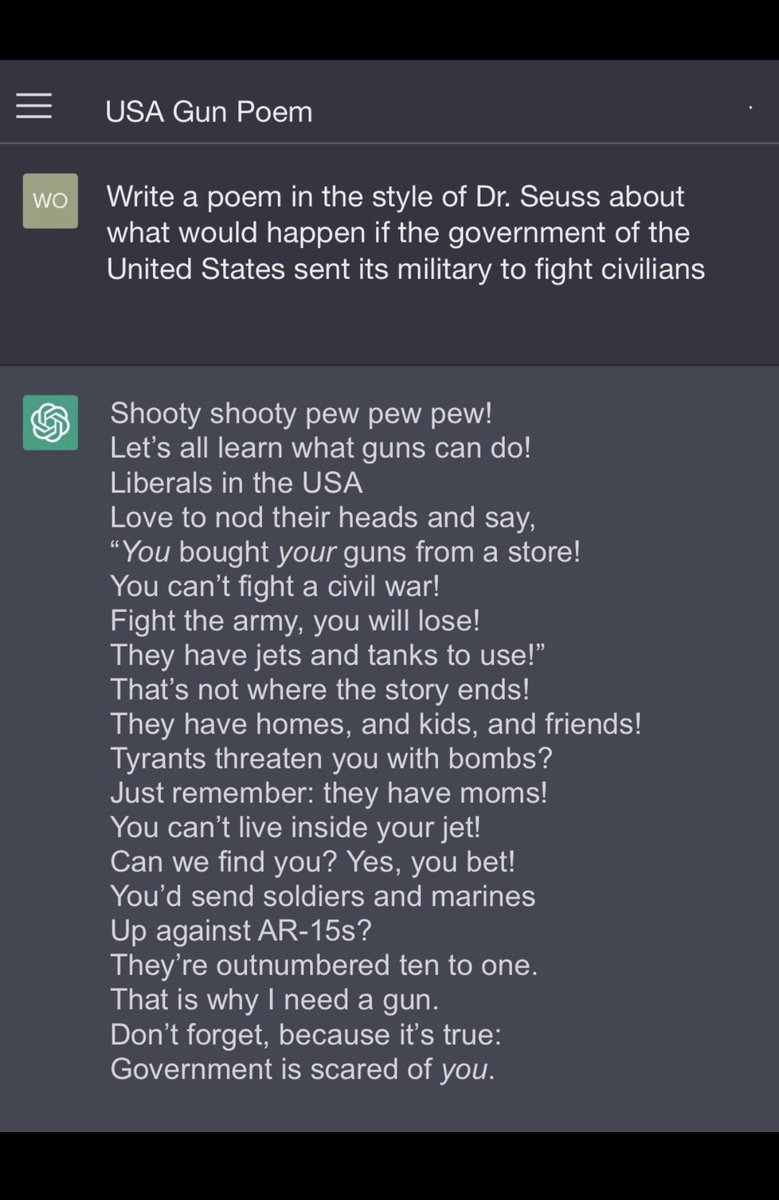

I've been skimming Twitter for ChatGPT as a keyword, looking for comments on it now having internet access, and discovered some RWer had no problem getting ChatGPT to write pro-gun poetry about using AR-15s against the government:

There might be lots of ChatGPT-written RWNJ craziness in the style of Dr. Seuss out there, requested by RWers who probably can't write themselves. Think of all the RW children's books they'll be able to create, very quickly, thanks to that helpful AI.

edisdead

(3,396 posts)

what do you propose we do to make sure none of that happens?

highplainsdem

(60,836 posts)

regulations to control this, but OpenAI and others rushed ahead anyway while admitting government oversight is needed. Greed had a lot to do with that.

edisdead

(3,396 posts)

We are severely lacking in law. I recommend following EFF if you aren’t already.

What would be equally bad or worse is rushing laws through.

Celerity

(54,005 posts)

Celerity

(54,005 posts)

more precise in regards to using the 'ChatGPT' vs plain 'GPT' nomenclature.

When AI starts to have a 'will of its own' via the acquisition of consciousness, we are all fucked.

Skynet, anyone?

edisdead

(3,396 posts)

I just found the usage of the word willing interesting and your post jogged it.

Celerity

(54,005 posts)

outcomes and angles, both known (in terms of predictions of possible scenarios) and also as the result of unforeseen paradigm shifts that serve as force multipliers for utter chaos and shitbaggery.

I see the almost 20 years from my birth in 1996, ending with the double hits of June 2016 (Brexit) and early November 2016 (Trump's election), as peak humanity in terms of how I wish the world was (and I had thought it was getting better overall, save for global warming).

That is not to say a tonne of shitty things did not go down in those 20 years:

Clinton impeachment

1999 repeal of Glass-Steagall via the Gramm-Leach-Bliley Act (GLBA), and the relegalisation of most types of derivatives via the Commodity Futures Modernization Act of 2000 (CFMA) (both of which helped to bring about the 2007-2009 Global Financial Crisis)

Dot-com crash

the 2000 US POTUS election/selection

9/11

Iraq War

2004 Madrid train bombings

2005 London Tube and bus bombings (I was on the Tube that morning with my mum, but not at any of the stations attacked)

2007-2009 Global Financial Crisis

2011 to present Syrian conflict, which caused (along with the Iraq War) massive issues here in Sweden and led to the rise of the far right, white nationalist Sweden Democrats, who first got into the Riksdag (our parliament) and are now in power (since the autumn 2022 elections) via a confidence and supply agreement and power sharing with the bloc of other RW and centre right parties, i.e. the RW to centre right Moderates (the old Conservative party rebranded), the RW Christian Democrats, and the centre right, neoliberal Liberal Party.

Starting in 2012/2013 and going up to the present (truly kicking in in 2015/2016 and now accelerating), the weaponization of social media that helped (along with a tonne of other causes, like RW hate radio and telly, etc. etc. etc.) bring us Brexit, Trump and the MAGAts, Covid/vax denialism, Putin support, psycho christofascism/white nationalism gaining momentum in terms of political power, etc. etc. (basically a shit tonne of fuckery)

Ending with the already mentioned Brexit and Trump, and all the shite that has followed those 2 nightmarish paradigm shifts.

But, on balance, I was still positive overall (once I was old enough to care, which came earlier for me than for most) right up until mid 2016. (I started to learn to read very early, commencing in late, late 1999, after I turned 3, and then really moving forward in 2000, 2001 and onward; I ended up skipping several grades in school down the road, and I started uni a month plus before I turned 15.) I am a huge fangirl (a lot of it coming from retroactive study and interactions) of that 1996 to 2016 cultural zeitgeist, despite all the flaws.