NNadir

NNadir's Journal

CAISO: Heat Wave in CA, So Called "Renewable Electricity" Matched Gas Electricity for 10 Minutes.

This is a follow up of my thread of yesterday, about the extreme heat being experienced in California and how so called "renewable energy" is faring on addressing the electricity demand associated with the need for cooling.

That thread is here: Looking at CAISO demand and supply of electricity during extreme California temperatures.

Yesterday I focused on the weather in San Bernardino, where the high temperature was 102°F (38°C), and mentioned Indio, California, one of California's fastest growing cities, albeit with a relatively small population for California of around 80,000. Today in San Bernardino, the predicted high will again be 102°F (38°C) at 3 pm. Indio is cooling down compared to yesterday, when temperatures of over 120°F (49°C) were recorded. Today's high will "only" be 118°F (48°C).

Humans cannot survive temperatures much higher than 120°F (49°C) without drinking copious amounts of water. A human trial with 10 young healthy male volunteers evaluated strategies for survival, in the absence of air conditioning, over 90 minutes at lower temperatures (43.0 ± 0.5 °C) than we're seeing in Indio:

Intermittent wetting clothing as a cooling strategy for body heat strain alleviation of vulnerable populations during a severe heatwave incident (Song, Wang, Zhang, Journal of Thermal Biology 79 (2019) 33–41).

The subjects, all men in their early 20s dressed in light clothing, all lost about 120 - 130 grams of water to evaporation and produced about 350 grams of sweat in this period.

The introduction to that paper had some fun text about the death toll associated with heat waves, albeit assuredly not in any way a comprehensive accounting:

In extremely hot environments (Tair ≥ 40 °C), people like the poor and the homeless in backward areas do not have a chance to access air-conditioning. Hence, they have a high risk of suffering heat stress during prolonged heatwave incidents. In fact, statistical data showed that those populations account for a large proportion of heat-induced death tolls (Åström et al., 2011, Gronlund, 2014, Gubernot et al., 2014). Besides, extreme heatwaves put strains on the electrical power grid and cause power outages in some regions which renders electrically powered cooling devices (e.g., air-conditioning, electric fans and water pumps) useless...

Power outages in these conditions can kill a person.

Of course, people don't often discuss the death toll associated with heat waves. Most people, in my experience, would rather talk about the 2011 Earthquake and Tsunami in Japan in which 20,000 people died from seawater, although the deaths from seawater are in no way as interesting as the possibility that someone some day somewhere may die from radiation that leaked from nuclear reactors destroyed by the Tsunami.

I'm frequently told that nuclear power is "too dangerous," by people who apparently believe that climate change is not "too dangerous." By contrast, I've been hearing for most of my adult life - I'm decidedly not young - that so called "renewable energy" will save the day. It hasn't saved the day and it isn't saving the day, but in these times, we like to substitute faith for facts, and who am I to argue with lies in the age of popular lies, where the lies we tell others and the lies we tell ourselves are celebrated?

California is often presented as a so called "renewable energy" paradise.

We are nearing the summer solstice, and of course, California is a putative solar energy nirvana in particular.

Real time data is available at the CAISO website: CAISO Website.

Since I check this website frequently, and have been doing so as we approach the solstice, I expect that so called "renewable energy" will be dominated by solar production in the early afternoon. As of this writing, peak solar production in the entire State of California is 11,382 MW, at 12:10 PDT, the high so far for the day. In the whole state, wind power is producing a total of 710 MW. The CAISO site predicts that peak power demand for 6/17/21 will come at 18:10 PDT (6:10 PM) and will be 43,048 MW, as the sun is going down, and with it, solar power production. The current demand, at 12:20 PDT, is 35,599 MW.

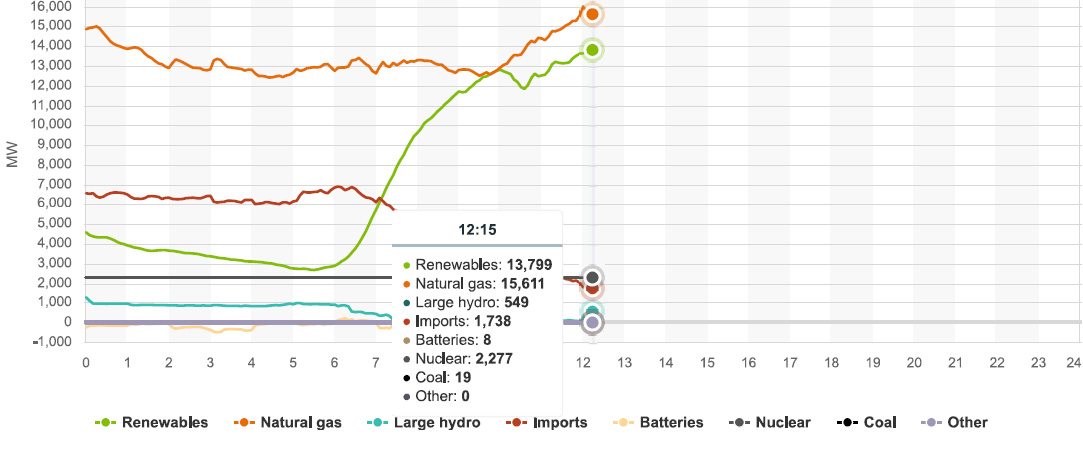

People like to cheer for what so called "renewable energy" does at peaks. At no point yesterday did all the renewable energy facilities in the entire State of California ever, even for a few minutes, match the power produced by burning dangerous natural gas and dumping the dangerous fossil fuel waste carbon dioxide directly into the planetary atmosphere.

Today however, for a period of about 10 minutes, between 9:45 and 9:55, so called "renewable energy" matched the output of the dangerous natural gas plants in the state; at 9:55, all the so called "renewable energy" in the State of California was producing 12,756 MW, exactly equal to what dangerous natural gas was producing.

I'll pause for cheers...

After half a century of wild cheering for so called "renewable energy," it is still - I contend always will be - dependent on access to dangerous natural gas. We. Couldn't. Care. Less.

There is one nuclear plant left in California, Diablo Canyon (2 reactors). It is producing about 2,278 MW of electricity in two small buildings, more than all the wind turbines in California. The reactor came on line 36 years ago, and is functioning fine. It's reliable and predictable. No one has been killed by pollution produced by the Diablo Canyon Nuclear Plant. I contend that the used nuclear fuel stored there, all of it on site, will be a valuable resource for future generations that will be less stupid than mine has been.

Unfortunately this nuclear plant is about to close because of appeals to ignorance. That will raise California's dependency on gas. No replacement is planned for this valuable resource, which is, at this exact point, producing more energy than all the wind turbines in the entire state, and doing so without turning vast tracts of wilderness into industrial parks.

The nuclear plant will be replaced by dangerous natural gas. There will be lots of outright lies told to the contrary, but the plant will be replaced by dangerous natural gas.

Dangerous natural gas is not clean; it is not safe, and it releases significant amounts of the important dangerous fossil fuel waste carbon dioxide, and leaks from the transport and use of dangerous natural gas release the second most important climate forcing gas, methane.

Graphics from the CAISO website for power production in California as of 12:15 PDT, 06/17/21:

There is a serious risk of California, particularly Southern California, becoming uninhabitable, particularly with respect to the effect of climate change, to which dangerous natural gas is a contributor, on water supplies. You may think I'm being extreme here, but I don't think so.

We're kidding ourselves if we think we're doing anything to address climate change.

History will not forgive us; nor should it.

Looking at CAISO demand and supply of electricity during extreme California temperatures.

Temperatures in parts of California today will exceed 105°F (40°C) and in places, Indio for example, will reach around 120°F (49°C). Air conditioning will be cranking up for sure, and will be working at lower efficiency.

We are nearing the summer solstice, and of course, California is a putative solar energy nirvana.

Real time data is available at the CAISO website: CAISO Website.

A short while ago, I downloaded some graphics.

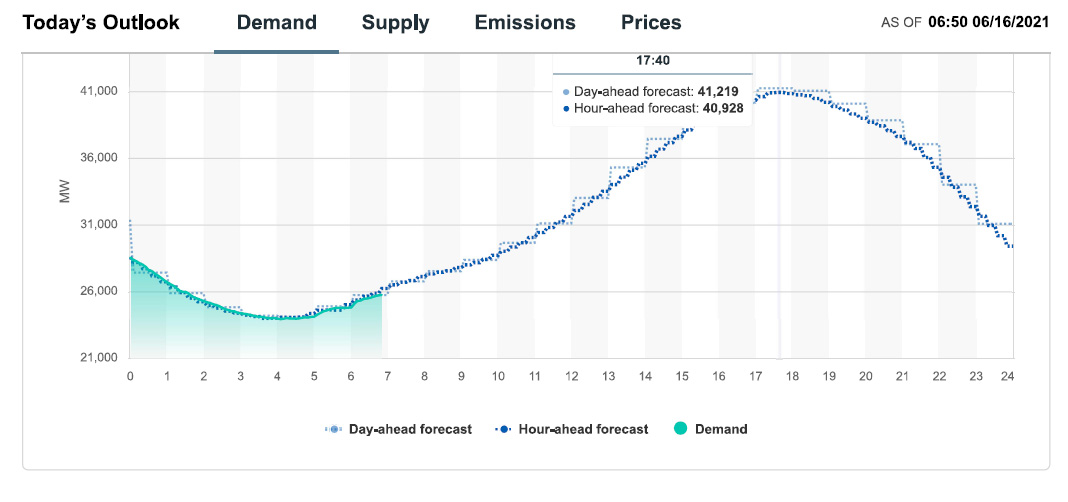

Demand and demand forecast for 06/16/21:

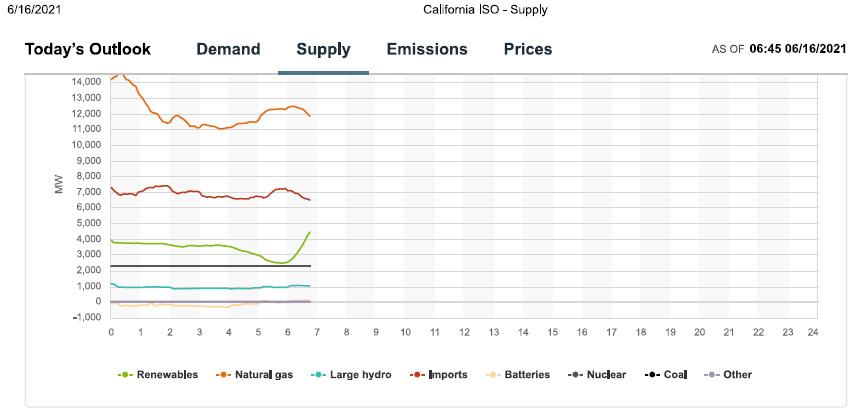

Overall Energy Supply

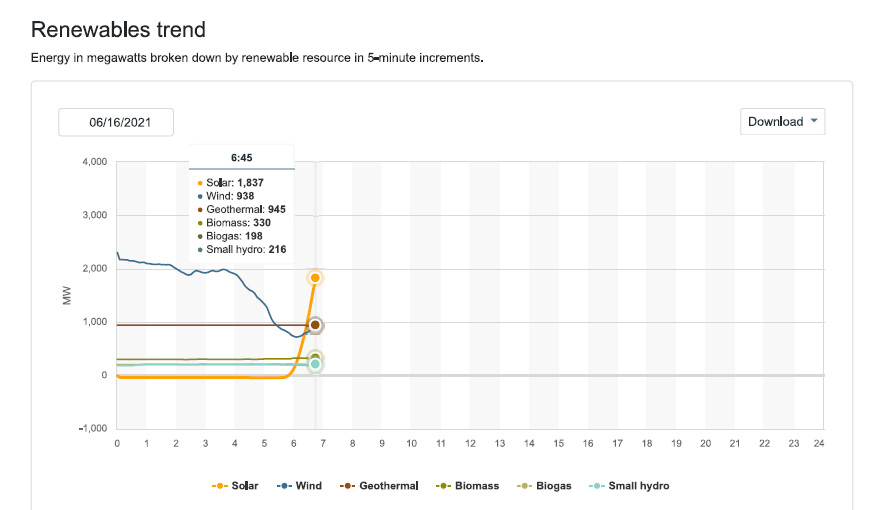

Since I check this website frequently, and have been doing so as we approach the solstice, I expect that so called "renewable energy" will be dominated by solar production in the early afternoon, which should approach peak power of 13,000 MW.

Impressive, no?

Well, demand will peak as the sun falls, so there's that.

Not much wind is blowing this morning. Maybe it will change, who knows. When and if is not known.

Gas was dominating when I downloaded the graphics, and it will dominate near the peak.

Some people, me for instance, believe the regular occurrence of these kinds of high temperature weather events is connected with climate change.

After half a century of wild cheering for so called "renewable energy," it is still - I contend always will be - dependent on dangerous natural gas.

There is one nuclear plant left in California, Diablo Canyon (2 reactors). It is producing about 2,278 MW of electricity in two small buildings, more than all the wind turbines in California. The reactor came on line 36 years ago, and is functioning fine, but well, it's being closed and no replacements are planned in California. (The production of electricity at this plant may fall slightly as the temperature rises in the afternoon, because of changes in thermodynamic efficiency connected with high temperatures, but certainly output will not fall below 2,200 MW). It's reliable and predictable.

Unfortunately this nuclear plant is about to close because of appeals to ignorance. That will raise California's dependency on gas.

Dangerous natural gas is not clean; it is not safe, and it releases significant amounts of the important dangerous fossil fuel waste carbon dioxide, and leaks from the transport and use of dangerous natural gas release the second most important climate forcing gas, methane.

There is a serious risk of California, particularly Southern California, becoming uninhabitable. You may think I'm being extreme here, but I don't think so.

We're kidding ourselves if we think we're doing anything to address climate change.

Indio California to see 122 degrees F on Friday.

Nice breeze though. The all time highest temperature ever recorded was 123°F.

From Weather.com:

Fri 18 | Day: 122°F, W 13 mph

Mostly sunny skies. Near record high temperatures. High 122F. Winds W at 10 to 20 mph.

Humidity: 11%

UV Index: 10 of 10

Sunrise: 5:34 am

Sunset: 7:58 pm

Fri 18 | Night: 89°F, NW 14 mph

Clear. Low 89F. Winds NW at 10 to 20 mph.

Humidity: 19%

UV Index: 0 of 10

Moonrise: 1:23 pm (Waxing Gibbous)

Moonset: 1:16 am

Just checked the weather forecast for Indio California. 119F, 5 pm Tuesday.

I wonder how the date trees will fare.

828 Underground Nuclear Tests, Plutonium Migration in Nevada, Dunning, Kruger, Strawmen, and Tunnels

This is a very long post, probably not one worth reading, but it was fun to write, as in writing it, I reviewed some familiar concepts deepening my understanding and, as always, learned many new things as well, and came to some new ideas. As such, for what it's worth, I've decided to post it, as desultory and as sloppy as it is.

One of the papers I'll discuss in this post is this one: Plutonium Desorption from Nuclear Melt Glass-Derived Colloids and Implications for Migration at the Nevada National Security Site, USA (Claudia Joseph, Enrica Balboni, Teresa Baumer, Kerri Treinen, Annie B. Kersting, and Mavrik Zavarin Environmental Science & Technology 2019 53 (21), 12238-12246)

“Nevada National Security Site” is a euphemistic marketing rebranding of the nuclear weapons testing area previously known as the Nevada Test Site (NTS), and before that as the Nevada Proving Grounds. Of course the rebranding is a little absurd, since the reason for testing so many nuclear weapons there was insecurity, not security, deriving from the fear that the former Soviets would have more and better nuclear weapons than “we” do, so an improved name for the site would be “Nevada National Insecurity Site.”

Anyway.

From the introductory text from the paper cited at the outset:

At the NNSS, the majority of the nuclear tests were conducted underground in silicic volcanic rocks of rhyolitic composition (75% SiO2 and 15% Al2O3), with approximately 30% conducted below the water table.(1) The high temperatures and pressures achieved during an underground nuclear explosion vaporize and melt the surrounding rock.(12) During this process, the overwhelming majority of the refractory radionuclides, including Pu, are incorporated into the melted rock,(13,14) also referred to as nuclear melt glass, which pools at the bottom of the test cavity.(12,15) The initial groundwater temperatures and temperature histories in test cavities vary substantially. The Cambric nuclear test likely returned to ambient temperatures within 10 years,(16) while other tests, such as Almendro, recorded a downhole temperature of 157 °C, 23 years after detonation.(17) Plutonium, as well as other radionuclides (e.g., 137Cs), will be released from the nuclear melt glass by dissolution during contact with groundwater.(18) Previous studies(19,20) have shown that hydrothermal alteration of rhyolitic glasses of similar composition to those found at the NNSS leads to the formation of clay (e.g., smectite/montmorillonite) and zeolite (e.g., clinoptilolite-heulandite, analcime)(4,21) secondary minerals, a fraction of which may be found in the form of colloids. Colloids, defined as particles ranging in size from 1 to 1000 nm, have low settling velocities and can remain suspended in solution for long periods of time.(22,23) At the NNSS, the presence of fractured volcanic rock, high flow rates, and low-ionic-strength groundwater enhance colloid stability and potential migration.(4,8,24,25) Although colloid-facilitated transport has been recognized as the primary cause of Pu downgradient migration at this site,(4) the stability of Pu on these colloids has yet to be determined, limiting the conceptual understanding of the long-term migration potential of Pu at the NNSS.

It is interesting to note what the energy value of this 2.8 metric tons of plutonium would be, were it subjected to fission in a nuclear reactor.

It can be shown, by appeal to databases, for example at the Brookhaven National Laboratory, that the fission of a single atom of plutonium-239 yields about 199 MeV of energy, ignoring neutrinos, which represent energy that is not recoverable. With some simple calculations, this translates to about 80.3 trillion joules per kg of plutonium. Thus the energy associated with the plutonium melted into glass at the Nevada National (In)security Site is about 0.22 exajoules, or roughly 0.2% of all the energy consumed in the United States each year, that being roughly 107 exajoules per year, last time I looked.
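The arithmetic above can be checked in a few lines. This is only a sketch: it takes the 199 MeV per fission, the 2.8 metric tons, and the 107 exajoules per year from the text, and assumes a molar mass of 239.05 g/mol for plutonium-239; the variable names are purely illustrative.

```python
# Energy released if a given mass of Pu-239 were completely fissioned.
# Inputs marked "from the text" are the post's figures; the rest are assumptions.
AVOGADRO = 6.02214076e23           # atoms per mole
JOULES_PER_MEV = 1.602176634e-13   # joules per MeV
PU239_MOLAR_MASS_G = 239.05        # g/mol (assumed)

mev_per_fission = 199.0            # from the text, neutrinos excluded
plutonium_kg = 2.8 * 1000.0        # 2.8 metric tons, from the text
us_annual_joules = 107e18          # about 107 EJ per year, from the text

atoms_per_kg = AVOGADRO * 1000.0 / PU239_MOLAR_MASS_G
joules_per_kg = mev_per_fission * JOULES_PER_MEV * atoms_per_kg
total_joules = joules_per_kg * plutonium_kg
share_of_us_energy = total_joules / us_annual_joules

print(f"{joules_per_kg / 1e12:.1f} TJ per kg")
print(f"{total_joules / 1e18:.3f} EJ in total")
print(f"{share_of_us_energy * 100:.2f} % of annual US energy use")
```

The result is sensitive only to the energy per fission and the assumed mass; the molar mass and physical constants contribute negligible uncertainty.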

In other terms, familiar to old people like me, it is equivalent to about 37 million barrels of oil, or about 1.9 billion gallons of gasoline. (For comparison, the Exxon Valdez oil spill, which destroyed, for a time, much of the ecosystem of Prince William Sound, spilled about 260,000 barrels of oil. The Deepwater Horizon oil spill in 2010 amounted to 4.9 million barrels of crude oil.) I use these oil/gasoline equivalents to make clear how this might look as an uncontrolled oil or gasoline spill. Of course, more or less, this plutonium is an uncontrolled, albeit deliberate, release into the subterranean environment. Thus it is in the same situation as crude oil before it is deliberately brought to the surface, where it is literally choking the planetary atmosphere, and for that matter the hydrosphere, to death.

Napalm is, of course, gasoline gelled with the addition of palmitic acid, which is sometimes transformed into the "renewable" diesel fuel biodiesel. As napalm is a weapon of mass destruction which has easily killed more people than the bombings of Hiroshima and Nagasaki did in the only nuclear war ever observed, it is clear that crude oil and its refined product, gasoline, represent a proliferation risk. Thus the Nevada National (In)security Site might be matched if the US Air Force had bombed Nellis Air Force Base with so much napalm as to leave behind 37 million barrels of gasoline unconsumed. When prepared for commercial processes, crude oil and gasoline, of course, spread rapidly when released into the environment in dangerous fossil fuel accidents (that vanish quickly down the memory hole), but the question in this post is "how rapidly does plutonium spread?"
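The oil and gasoline equivalents quoted above can be roughly reproduced as follows. This is a sketch under stated assumptions: the energy per barrel of oil equivalent (5.8 million BTU) and per gallon of gasoline (33.7 kWh) are conventional reference values I am assuming, not figures from the post, and the plutonium energy uses the 80.3 TJ/kg and 2.8 metric tons given in the text.

```python
# Converting the fission energy of the plutonium into oil and gasoline equivalents.
JOULES_PER_BTU = 1055.06
JOULES_PER_KWH = 3.6e6

# Assumed conversion factors (conventional values, not from the post):
joules_per_barrel_oil = 5.8e6 * JOULES_PER_BTU        # barrel of oil equivalent
joules_per_gallon_gasoline = 33.7 * JOULES_PER_KWH    # gallon of gasoline equivalent

# Figures from the text:
plutonium_joules = 80.3e12 * 2800.0    # 80.3 TJ/kg times 2.8 metric tons
exxon_valdez_barrels = 260_000
deepwater_horizon_barrels = 4.9e6

barrels = plutonium_joules / joules_per_barrel_oil
gallons = plutonium_joules / joules_per_gallon_gasoline

print(f"{barrels / 1e6:.0f} million barrels of oil equivalent")
print(f"{gallons / 1e9:.1f} billion gallons of gasoline equivalent")
print(f"{barrels / exxon_valdez_barrels:.0f} times the Exxon Valdez spill")
print(f"{barrels / deepwater_horizon_barrels:.1f} times the Deepwater Horizon spill")
```

Different sources quote somewhat different heating values for crude oil and gasoline, so the last digit of each equivalent should not be taken too seriously.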

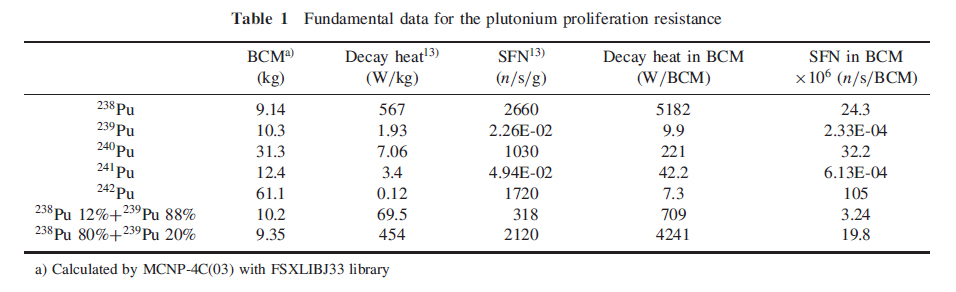

Some time ago, I indicated in this space what the bare sphere critical mass of various plutonium isotopes is: Bare Metal Critical Masses of Commonly Available Plutonium Isotopes. (I just edited it to restore broken graphics links.)

Here, for convenience, is one of the two tables from the paper discussed in that post:

In this table, BCM refers to "Bare (Metal) Critical Mass" and SFN refers to "Spontaneous Fission Neutrons."

Around two billion years ago, uranium-235 - which has a shorter half life than the dominant uranium isotope, U238 - represented a larger fraction of the mass of uranium ores. In fact, all of the uranium ores on Earth at that time were "SEU," slightly enriched uranium, which is used in many modern nuclear reactors, where only a slight enrichment is necessary in the presence of a moderator, with rare exceptions water or heavy water, for a reactor to go critical. The evolution of oxygen in the Earth's atmosphere allowed uranium to flow and precipitate in various systems so that at a place called "Oklo" in modern day Gabon, natural nuclear reactors went critical, operating in a cyclical fashion for a few hundred thousand years. Here is just one of many papers discussing this interesting event:
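To put a number on "slightly enriched," one can run the two decay clocks backward. The sketch below assumes half-lives of about 704 million years for U-235 and 4.468 billion years for U-238, and a present-day U-235 atom fraction of about 0.72%; these are standard reference values I am supplying, not figures from the post, and the small U-234 contribution is ignored.

```python
# Atom fraction of U-235 in natural uranium at some time in the past.
U235_HALF_LIFE_Y = 7.04e8       # assumed reference value
U238_HALF_LIFE_Y = 4.468e9      # assumed reference value
U235_FRACTION_TODAY = 0.0072    # assumed present-day atom fraction

def u235_fraction(years_ago: float) -> float:
    """Back-calculate the U-235 atom fraction `years_ago` years before the present."""
    # Each isotope was more abundant in the past by a factor 2**(t / half_life).
    n235 = U235_FRACTION_TODAY * 2.0 ** (years_ago / U235_HALF_LIFE_Y)
    n238 = (1.0 - U235_FRACTION_TODAY) * 2.0 ** (years_ago / U238_HALF_LIFE_Y)
    return n235 / (n235 + n238)

fraction_at_oklo = u235_fraction(2.0e9)
print(f"U-235 fraction two billion years ago: {fraction_at_oklo * 100:.1f} %")
```

Under these assumptions the fraction comes out a little under 4%, which is in the range used in many modern light water reactor fuels.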

Fission product retention in the Oklo natural fission reactors (David Curtis, Timothy Benjamin, Alexander Gancarz, Robert Loss, Kevin Rosman, John DeLaeter, James E. Delmore, William J. Maeck, Applied Geochemistry, Volume 4, Issue 1, 1989, Pages 49-62)

Although critical masses are very different for oxides than they are for metals, and very different in the presence or absence of water - the cyclical nature of the Oklo reactors was tied to the fact that the water that moderated neutrons and started the reactors boiled, shutting them down until they cooled enough to resume criticality - one may wonder, naively at least, whether it is possible for similar reactors to form at the Nevada National (In)security Site. This, in turn, will depend on the ability of plutonium to migrate as uranium did billions of years ago.

Don't worry; be happy. (I think it very, very, very, very unlikely.)

In situ plutonium was generated at the natural Oklo reactors just as it is in modern anthropogenic nuclear reactors, whether the reactors are constructed for warlike purposes (where the plutonium production is for the more environmentally impactful weapons grade material which must be synthesized in low concentrations in the fuel) or for saving lives from air pollution in commercial reactors. At Oklo, the evidence is that the plutonium was retained in apatite, a mineral also found in synthetic bone and as a result did not migrate very far: Isotopic evidence for trapped fissiogenic REE and nucleogenic Pu in apatite and Pb evolution at the Oklo natural reactor (Kenji Horie, Hiroshi Hidaka, François Gauthier-Lafaye Geochimica et Cosmochimica Acta, Volume 68, Issue 1, 2004, Pages 115-125).

A fairly sophisticated reactor physics analysis of the Oklo natural reactors may be found here: Criticality of the reaction zone 9 of Oklo reactors revisited (K. Mohamed Cherif, A. Seghour, F.Z. Dehimi, Applied Radiation and Isotopes, Volume 149, 2019, Pages 165-173)

A similar discussion may be found here: R.T. Ibekwe, C.M. Cooling, A.J. Trainer, M.D. Eaton, Modeling the short-term and long-term behaviour of the Oklo natural nuclear reactor phenomenon, Progress in Nuclear Energy, Volume 118, 2020.

Weapons grade plutonium is mostly 239Pu, and generally has less than 5% 240Pu as I understand it, but during a nuclear explosion, in which a very high neutron flux is observed, because not every plutonium atom fissions when struck by a neutron - some are absorbed according to an important parameter in nuclear engineering, the capture to fission ratio – some of the 239Pu in a nuclear explosion is converted into 240Pu, which is more radioactive than 239Pu because it has a shorter half-life.

Many discussions of radioactivity use the unit "Becquerel," named after the discoverer of radioactivity, Henri Becquerel, which is often abbreviated Bq (and, more rarely, "Beq"); one Becquerel represents the decay of a single atom per second. The issue of single atom decays in this post will come up later, when I turn to the Dunning Kruger effect and Strawmen listed in the title of this post. Sometimes one will see a fractional number of Becquerels, which should be interpreted by taking the inverse of the number to find the average number of seconds required before a decay is observed. Another unit of radioactivity is the "Curie," usually abbreviated "Ci." Historically it was taken as the number of decays observed per second in 1 gram of radium. It has been updated to mean exactly 3.7 X 10^(10) Bq, 37 billion decays per second.
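Since these units recur throughout what follows, here is a small sketch of the conversions just described. The only physical input is the definition of the Curie as 3.7 X 10^(10) decays per second; the function names are my own.

```python
# Conversions between the radioactivity units described above.
BQ_PER_CI = 3.7e10   # definition of the Curie, in decays per second

def ci_to_bq(curies: float) -> float:
    return curies * BQ_PER_CI

def bq_to_ci(becquerels: float) -> float:
    return becquerels / BQ_PER_CI

def mean_seconds_per_decay(becquerels: float) -> float:
    """Average waiting time between decays for a given activity."""
    return 1.0 / becquerels

print(ci_to_bq(1.0))                 # one Curie in Bq
print(ci_to_bq(1e-12))               # one picoCurie in Bq
print(mean_seconds_per_decay(0.5))   # seconds between decays at 0.5 Bq
```

The waiting time is an average only; individual decays are random events, so any single interval can be much shorter or longer.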

Thus knowing the mass of plutonium in some setting is not sufficient to describe how radioactive it is. For example, here is part of a graphic (part b) from a 1999 paper on the topic of plutonium migration, one of whose authors is Annie B. Kersting, who is an authority on plutonium migration and who definitely knows more about the topic than, say, Bonnie Raitt (see below):

The caption:

Source: Kersting, A., Efurd, D., Finnegan, D. et al. Migration of plutonium in ground water at the Nevada Test Site. Nature 397, 56–59 (1999).

Tybo, Benham, Molbo, and Belmont are all code names for underground nuclear tests conducted by the United States government in Nevada.

Here, from the paper just cited, is a map of where the underground nuclear tests were conducted, as well as some cartoons diagramming the conditions under which the two tests in question were conducted.

The caption:

We will return below to the measurements reported in this 1999 paper, and compare them with results from other measurements of plutonium in ground water reported in 2020.

Here is a 2002 government report detailing some information about Tybo and Benham, also the topic of Dr. Kersting's 1999 paper: TYBO/BENHAM: Model Analysis of Groundwater Flow and Radionuclide Migration from Underground Nuclear Tests in Southwestern Pahute Mesa, Nevada

The text indicates that the inventory of residual plutonium in the Benham test is 1.5 moles of Pu240 and 17.1 moles of Pu239 in the glasses lining the nuclear test cavities. The molar ratio between the two isotopes is 8% Pu240 and 92% Pu239, whereas the percentage of the observed radioactive decays for the two isotopes is 24% from Pu-240 and 76% from Pu-239. It is very difficult to imagine that the technology exists to isolate this residual plutonium from the Nevada National (In)security Site, but were it possible to do so, and were the composition of plutonium similar to that of the Benham test, it would be less than ideal for use in a nuclear weapon, workable perhaps, but very difficult to assemble because of the high neutron flux from Pu240 spontaneous fission.
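The difference between the mole split and the decay split follows from the half-lives alone, since activity is proportional to the number of atoms divided by the half-life. A minimal sketch, assuming half-lives of about 24,110 years for Pu-239 and 6,561 years for Pu-240 (reference values I am supplying) and the mole inventory quoted above:

```python
# Mole fraction versus activity fraction for the Benham plutonium inventory.
moles = {"Pu-239": 17.1, "Pu-240": 1.5}                    # from the text
half_life_years = {"Pu-239": 24110.0, "Pu-240": 6561.0}    # assumed reference values

total_moles = sum(moles.values())
mole_share = {iso: n / total_moles for iso, n in moles.items()}

# Activity is proportional to N / half-life; common constants cancel in the ratio.
relative_activity = {iso: moles[iso] / half_life_years[iso] for iso in moles}
total_activity = sum(relative_activity.values())
activity_share = {iso: a / total_activity for iso, a in relative_activity.items()}

for iso in moles:
    print(f"{iso}: {mole_share[iso] * 100:.0f} % of atoms, "
          f"{activity_share[iso] * 100:.0f} % of decays")
```

Because Pu-240 decays roughly 3.7 times faster per atom, its 8% share of the atoms becomes about a quarter of the decays.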

This is a reason, by the way, why isolation of plutonium for the assembly of nuclear weapons involves the production of considerably more so called "nuclear waste" than is the case for the reprocessing of commercial used nuclear fuels: To prevent the accumulation of Pu240, which tends to make nuclear weapons "fizzle," the fuel rods from the nuclear reactor must be removed after short irradiation times, when the plutonium in the fuel rods is still very dilute, and therefore more problematic to isolate.

I will now turn to a paper Dr. Kersting wrote (as the sole author) surveying migration of plutonium at several major sites where it is not in an engineered environment, that is, where it represents an environmental contaminant located without consideration of long term exposure. That paper is here: Plutonium Transport in the Environment (Annie B. Kersting, Inorganic Chemistry 2013 52 (7), 3533-3546)

Before turning to some of the graphics in the paper, it is useful to take a look at the degree of contamination at each of four nuclear weapons production sites discussed in the paper. (I emphasize that these are weapons sites, because, being poorly educated, unable to make distinctions, ignorant of historical context, indifferent to the observed - and growing - death toll resulting from not using nuclear power more broadly, anti-nukes love to call up reference to, say, Hanford, and point to it, as if it is relevant to my position on nuclear power, sometimes with truly awesome levels of stupidity and selective attention: See below.) The weapons production sites, besides the aforementioned Hanford site and the Nevada National (In)security Site, are the Rocky Flats Site in Colorado, and the Soviet Era Mayak Site in the Modern Day Russian Federation.

To wit:

The Nevada National (In)security Site:

The former NTS, currently called the Nevada National Security Site, was the location of 828 underground and 100 atmospheric nuclear tests conducted between 1956 and 1992 as part of the U.S. nuclear testing program.(94) Approximately 4.8 × 10^18 Bq (1.3 × 10^8 Ci, decay corrected to 1992) of radioactivity remains in the subsurface, consisting of fission products, activation products, actinides, and tritium.(94, 95) Approximately 3.1 × 10^16 Bq (8.3 × 10^5 Ci) of radioactivity comes from Pu.(94) Greater than 95% of the residual Pu and other refractory radionuclides are sequestered in the melt glass that coalesces at the bottom of the cavity.(96, 40) As the glass alters, radionuclides are released and are potentially available for transport. For more information on the phenomenology of underground nuclear tests, see work by Kersting and Zavarin and references cited therein.(40)

The NTS is located in an arid desert environment with the majority of the nuclear tests detonated in alluvium or rhyolitic volcanic rock. Approximately one-third of the underground nuclear tests were detonated below the groundwater table, in rhyolitic tuff. The groundwater is deep, roughly more than 250 m bgs, and is predominantly sodium bicarbonate, low ionic strength, and low organic carbon with a pH of ∼8.(97, 98) In the regions where groundwater flows through fractured volcanic rock, flow rates have been measured up to 80 m/year, whereas in the alluvial basins, groundwater flow rates are much slower, as low as 0.3 m/year.(99)

Rocky Flats:

Rocky Flats is located in the semiarid grasslands on the high plains of Colorado at the eastern edge of the Rocky Mountains. There are two distinct groundwater regimes at Rocky Flats. There is a deep groundwater, about 200–300 m bgs, which is isolated from the shallow groundwater. The shallow groundwater and surface water are inextricably linked because stream channels recharge the shallow groundwater while seeps discharge shallow groundwater to the surface.(102) The shallow groundwater has a pH that ranges between 7.5 and 9.9 and a DOM concentration between 3.6 and 14.0 mg of C/L. Concentrations of Pu in the surface waters ranged from 7.8 × 10^(–3) to 2.2 × 10^(–3) Bq/L (from 0.21 to 0.06 pCi/L).(23)

The radioactivity described in the surface waters near Rocky Flats is not very impressive, although it surely would excite most anti-nukes into paroxysms of stupidity. 7.8 X 10^(-3) Bq/L means that one would need to wait more than two minutes (128 seconds) to observe a single nuclear decay of a single atom of plutonium in a liter of the water. One of the more abysmally stupid things that anti-nukes say is that "there is no safe level of radioactivity." This ignores the fact that potassium is an essential element, without which all life, including human life, would not exist on this planet, and that all of the potassium on earth - without extensive and hardly ever practiced laboratory processing - is radioactive, owing to the natural presence of potassium-40 (K-40), which has a half-life of about 1.25 billion years. It is also worth noting therefore that when life evolved, potassium was more than twice as radioactive as it is now; life has always been bathed in radiation. A 70 kg human being contains about 140 grams of potassium (cf: Emsley, John, The Elements, 3rd ed., Clarendon Press, Oxford, 1998.) It is straightforward to calculate the radioactivity associated with this potassium, given the isotopic fraction of K-40 in potassium and its half-life. It works out to 4,250 Bq per body. Seawater, considering only its potassium content and not the significant quantities of uranium and uranium decay daughters it contains, is far more radioactive than Rocky Flats water, at about 12.9 Bq/liter.
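The potassium calculation can be sketched as follows. The 140 grams of potassium and the 7.8 X 10^(-3) Bq/L figure come from the text; the K-40 atom fraction (about 0.0117%), the half-life (about 1.25 billion years), and the molar mass of potassium are reference values I am assuming, and modest changes in them move the answer by a few percent.

```python
import math

# Activity of the potassium-40 in a human body, from N atoms and a half-life.
AVOGADRO = 6.02214076e23
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def activity_bq(n_atoms: float, half_life_years: float) -> float:
    """Activity A = lambda * N, with lambda = ln(2) / half-life."""
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)
    return decay_constant * n_atoms

potassium_grams = 140.0       # from the text (70 kg human)
K_MOLAR_MASS_G = 39.0983      # assumed reference value
K40_ATOM_FRACTION = 1.17e-4   # assumed reference value
K40_HALF_LIFE_Y = 1.25e9      # assumed reference value

k40_atoms = potassium_grams / K_MOLAR_MASS_G * AVOGADRO * K40_ATOM_FRACTION
body_bq = activity_bq(k40_atoms, K40_HALF_LIFE_Y)

# Average wait for one plutonium decay in a liter of Rocky Flats surface water.
rocky_flats_bq_per_liter = 7.8e-3   # from the text
seconds_per_decay = 1.0 / rocky_flats_bq_per_liter

print(f"K-40 in a 70 kg human: about {body_bq:.0f} Bq")
print(f"Rocky Flats water: one decay per liter every {seconds_per_decay:.0f} s")
```

With these inputs the body burden lands in the low thousands of becquerels, the same range as the figure given above; the exact value depends on the half-life and isotopic abundance adopted.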

Before being banned at Daily Kos for making a true statement, that statement being - I paraphrase - that "opposing nuclear energy is simply murder," I walked through some related calculations in a "diary" over there to show that the total radioactivity of seawater from potassium alone is about 2 X 10^22 Bq, or 530 billion curies: How Radioactive Is the Ocean? In the last 300,000 years, a fair portion of the existence of Homo sapiens, the fraction of potassium-40 that has decayed is 0.000163. The sea has always been radioactive and always will be radioactive, and, without ending all life on Earth, nothing can be done about it. Of course, it is something of a quixotic enterprise to explain science to a journalist, in general, and the owner of Daily Kos is just that, a journalist, one on our side perhaps, in terms of supporting and electing Democrats, but completely ignorant of issues in Energy and the Environment.

Mayak:

The Mayak Production Association, located near the southern Ural Mountains in Russia, was built in 1948 to produce Pu for nuclear weapons. It consisted of five nuclear reactors and a reprocessing plant. The Mayak region has been severely contaminated due to both routine and accidental releases of radioactivity.(106) From 1949 to 1956, approximately 106 PBq (2.9 × 10^6 Ci) of nuclear liquid waste was intentionally discharged into the Techa River. Some of the more mobile radionuclides, such as 90Sr, have been detected more than 2000 km downstream.(106)

Starting around 1951, waste effluent was discharged into nearby Lake Karachai. This lake, which has no outlets, was originally an upland marsh with high organic content.(107) It is underlain by fractured andesitic to basaltic metavolcanics. The discharge waste effluents were weakly alkaline NaNO3 brine solutions with a pH between 7.9 and 9.3. Approximately 4440 PBq (1.2 × 10^8 Ci) of nuclear waste effluent containing 90Sr, 137Cs, 239Pu, and 241Am was discharged into Lake Karachai.(106) Concentrations of Pu in the waste effluent at Lake Karachai were approximately 1000 Bq/L (2.7 × 10^4 pCi/L). The Pu concentrations measured in groundwater collected from downgradient wells were 4.8 Bq/L at 0.5 km and 0.029 Bq/L (7.8 × 10^(–1) pCi/L) at 4.0 km distance.(25)

Novikov and co-workers showed that greater than 90% of the Pu detected 4 km from Lake Karachai was associated with the colloidal fraction of groundwater. They were also able to show that the Pu was associated with iron oxyhydroxide colloids. Particle-size analysis of these colloids demonstrated that between 70 and 90% of the Pu detected in the colloidal fraction was associated with the smallest size 3–10 kD filter (∼1–15 nm). In the near field, the concentration of Pu both at Lake Karachai and in groundwater samples from the nearest wells exceeds the solubility of PuO2+x (s,hyd), favoring the formation of intrinsic Pu colloids. Understanding the processes controlling Pu transport from complex initial source chemistry to more than 4 km downgradient has yet to be fully determined.

Hanford:

The Hanford Site was established in 1943 as part of the U.S. weapons program to produce Pu for nuclear weapons. Located in the semiarid, south-central Washington State, the Hanford Site is located on a sequence of unconsolidated fluvial and lacustrine deposits (sand, gravel, and silt) approximately 30–122 m thick. The sediments overlie the Columbia River basalt, and the water table is variable but estimated between 230 and 270 m bgs in the central areas.(109)

The Hanford Site produced about 67 t of Pu for use in nuclear weapons and was the site of the first (sic) nuclear reactor.(110) Separating Pu produced from the reactors and reprocessing the waste resulted in the discharge of large quantities of Pu and other actinides to the shallow subsurface.(111) Releases of radionuclides and hazardous chemicals occurred in a variety of different forms: solid waste in unlined and lined trenches, liquid waste in shallowly buried tanks, and accidental surface releases. The chemical composition of the waste was highly variable with extremes in the pH, salinity, radionuclide composition, and concentration. Approximately 2.4 × 10^8–0.8 × 10^8 GBq (6.5 × 10^6–0.22 × 10^6 Ci) of high-level waste was discharged to the vadose zone from planned and unplanned releases and leaking tanks, and of this, approximately 4.4 × 10^(5) GBq (1.2 × 10^4 Ci) of 239Pu was disposed of as liquid waste in the near surface.(111, 112) Pu was discharged to the shallow subsurface in over 80 separate locations, although the vast majority of the transuranics was disposed of in the central plateau (200 Area).

It is useful to translate the units of radioactivity used here, GBq (gigabecquerels), into mass. (Note that almost all of the plutonium at Hanford is, in fact, weapons grade plutonium, since that was what the site was constructed to make.) Using the standard nuclear decay formulae, which should be familiar to a good high school student, one can show that the specific activity of plutonium-239 is 2.29 × 10^(12) Bq/kg, or 2.29 TBq/kg (terabecquerels per kilogram). Dividing the 4.4 × 10^(5) GBq of 239Pu quoted above by this figure, it follows that there is about 192 kg of weapons grade plutonium spread around the Hanford site in various places.
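For anyone who wants to check the arithmetic, here is a minimal sketch in Python of the specific activity calculation and the conversion of the quoted 4.4 × 10^(5) GBq into mass. The half-life (24,110 years) and molar mass (239.05 g/mol) of Pu-239 are assumed standard values that do not appear in the text above, and the function name is purely illustrative.

```python
import math

AVOGADRO = 6.02214076e23                 # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # Julian year, in seconds

def specific_activity_bq_per_kg(half_life_years, molar_mass_g_per_mol):
    """Decays per second per kilogram of a pure isotope."""
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)  # 1/s
    atoms_per_kg = AVOGADRO * 1000.0 / molar_mass_g_per_mol
    return decay_constant * atoms_per_kg

# Assumed nuclear data for Pu-239 (not given in the text).
PU239_HALF_LIFE_YEARS = 24_110
PU239_MOLAR_MASS = 239.05

sa_pu239 = specific_activity_bq_per_kg(PU239_HALF_LIFE_YEARS, PU239_MOLAR_MASS)

# 4.4 x 10^5 GBq of Pu-239, the figure quoted for near-surface liquid waste.
hanford_activity_bq = 4.4e5 * 1e9
hanford_mass_kg = hanford_activity_bq / sa_pu239

print(f"Specific activity of Pu-239: {sa_pu239:.3g} Bq/kg")
print(f"Equivalent mass of Pu-239:   {hanford_mass_kg:.0f} kg")
```

The result depends slightly on the half-life and year length one assumes, but it should land close to the 2.29 TBq/kg and roughly 192 kg figures given above.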

An important point at Hanford and Mayak, as opposed to Rocky Flats, to be discussed immediately below, and the Nevada National (In)security Site is that at the two former sites, the chemical form of the plutonium is varied, in some cases acidic, which can change the mobility.

A fairly comprehensive report on plutonium, americium, and neptunium contamination at Hanford, PNNL-18640 (Cantrell, 2009), is referenced by Dr. Kersting and is available for free online. A unit used in many places in the report is the pCi, the "picocurie," which is equal to 0.037 Bq, implying that at an activity of one pCi one must wait, on average, a little under 30 seconds to observe the decay of a single atom. For comparison, a cubic meter of seawater will on average contain about 350,000 pCi of potassium-40. The conclusions of the report include the following remarks:

The primary reason that disposal of these large quantities of transuranic radionuclides directly to the vadose zone at the Hanford Site has not resulted in widespread groundwater contamination is that under the typical oxidizing and neutral to slightly alkaline pH conditions of the Hanford vadose zone, transuranic radionuclides (plutonium and americium in particular) have a very low solubility and high affinity for surface adsorption to mineral surfaces common within the Hanford vadose zone. Other important factors are the fact that the vadose zone is typically very thick (hundreds of feet) and the net infiltration rate is very low due to the desert climate.

In some cases where transuranic radionuclides have been co-disposed with acidic liquid waste, transport through the vadose zone for considerable distances has occurred. For example, at the 216-Z-9 Crib, plutonium-239 and americium-241 have moved to depths in excess of 36 m (118 ft) bgs. Acidic conditions and the presence of possible complexants could increase the solubility of these contaminants and reduce adsorption to mineral surfaces. Subsequent neutralization of the acidity by naturally occurring calcite in the vadose zone (particularly in the Cold Creek unit) appears to have effectively stopped further migration.

Anyway.

When people come to me to display their intellectual laziness and/or ignorance by complaining about so called "nuclear waste," they often pull arbitrary numbers out of their hats about "how long" it's supposed to stay "dangerous." One hears numbers like "billions of years" (which is how long potassium, uranium and thorium, all of which were on the planet when it formed, remain radioactive), or "millions of years" and sometimes "thousands of years," as if the carbon dioxide, mercury, and lead from dangerous fossil fuels disappear in minutes, even though their environmental lifetimes are essentially eternal.

In connection with the 2.8 tons of plutonium at the Nevada National (In)security Site, it is useful for the purposes of this "too long to read" post to consider what the decay timelines for the plutonium at the site will be.

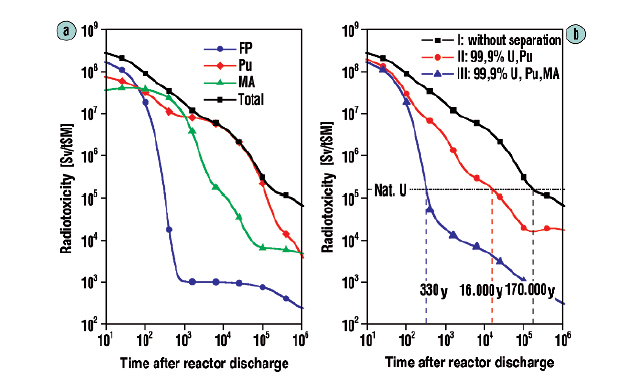

In the Inorganic Chemistry 2013 paper, Dr. Kersting provides a kind of graphic one sees in many contexts connected with the management of so called "nuclear waste," a comparison between radiotoxicity (by various means of biological transport) associated with the constituents of used nuclear fuels (or in this case weapons manufacture and/or nuclear testing) in comparison with that associated with natural uranium ores. It is important to note however, since Dr. Kersting is a geochemist concerned with the behavior of actinides and fission products in the environment, specifically in geological structures, she is primarily concerned with buried plutonium. Here it is:

The caption:

This graphic is reproduced from an original document here: Nuclear waste forms for actinides (Ewing, PNAS March 30, 1999 96 (7) 3432-3439)

Of course, the only "waste form" at the Nevada National (In)security Site, is glass formed from the heat of the explosion of a nuclear weapon underground. Below we'll consider how mobile this plutonium is. However, it is next to impossible to directly recover it in any way.

Using simple nuclear decay laws, the atomic weights of the two isotopes and the data discussed above for just one of the 828 underground nuclear explosions in Nevada, the Benham explosion, I have prepared the following table.
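The decay law behind a table of this kind can be sketched in a few lines of Python. This is only an illustration of the law itself, not a reproduction of the table: the half-lives of Pu-239 (24,110 years) and Pu-240 (6,561 years) are assumed standard values, and the time points are arbitrary choices.

```python
def fraction_remaining(elapsed_years, half_life_years):
    """Fraction of the original atoms left after elapsed_years."""
    return 0.5 ** (elapsed_years / half_life_years)

# Assumed half-lives in years (not stated in the text).
HALF_LIVES_YEARS = {"Pu-239": 24_110, "Pu-240": 6_561}

# Arbitrary illustrative time points.
for years in (100, 1_000, 10_000, 100_000):
    row = ", ".join(
        f"{isotope}: {fraction_remaining(years, t_half):.3f}"
        for isotope, t_half in HALF_LIVES_YEARS.items()
    )
    print(f"after {years:>7,} years -> {row}")
```

Multiplying each fraction by an initial inventory, in atoms or kilograms, gives the amount remaining at that time.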

Anti-nukes are prone to deny it, because they are most often discussing topics about which they know nothing, but the situation is very different at weapons sites as opposed to commercial nuclear reprocessing sites. The recovery of the transuranium actinides using a wide variety of processes is well understood, and all of them, not just plutonium but also neptunium, americium, and curium, are potential nuclear fuels. I therefore always argue that it is just stupid to bury them, as they are vital resources in a time of climate change and massively rising air pollution death rates.

The following figure shows the very different case obtained if one separates the uranium, plutonium and minor actinides (neptunium, americium and curium) and fissions them, whereupon the radioactivity falls to a level that is actually below that of the original uranium in a little over 300 years:

The caption:

(Hartwig Freiesleben, The European Physical Journal Conferences · June 2013)

Source 17, in German, is this one: Reduzierung der Radiotoxizität abgebrannter Kernbrennstoffe durch Abtrennung und Transmutation von Actiniden: Partitioning. Reducing spent nuclear fuel radiotoxicity by actinide separation and transmutation: partitioning.

It is important to note that simply because a material is radioactive does not imply that it is not useful, perhaps even capable of accomplishing tasks that nothing else can do as well or as sustainably. Given the level of chemical pollution of the air, water and land, in fact, the use of radiation, in particular high energy radiation, gamma rays, x-rays, and ultra UV radiation may prove to be more important than ever, but that's a topic for another time.

To return to the topic at hand, plutonium - which I regard as an essential element for a sustainable world - plutonium dioxide, the form of plutonium found very commonly in the environment, is an extremely insoluble compound. The concentration of plutonium in water over the oxide has been calculated to be 3 × 10^(-17) M. (cf. Haschke, Thermodynamics of water sorption on PuO2: Consequences for oxide storage and solubility, Journal of Nuclear Materials, Volume 344, Issues 1–3, 1 September 2005, Pages 8-12.) One can back calculate what this represents in terms of radioactive decay, in units of Bq, again using the mass of 239Pu, the decay constant and Avogadro's number, to see that this represents 1.65 × 10^(-5) Bq per liter, meaning that if one were observing a liter of water saturated with PuO2, one would need to wait nearly 17 hours on average to observe even a single atom of plutonium decaying in the saturated water. This is way less radioactive than the natural radioactivity of seawater or, for that matter, human flesh.
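The back calculation from solubility to decay rate can be sketched as follows. It assumes that all of the dissolved plutonium is Pu-239 with a half-life of 24,110 years (an assumed standard value), and the variable names are illustrative only.

```python
import math

AVOGADRO = 6.02214076e23                 # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Calculated solubility quoted above, in mol/L.
solubility_mol_per_l = 3e-17

# Assumption: the dissolved plutonium is pure Pu-239.
pu239_half_life_years = 24_110
decay_constant = math.log(2) / (pu239_half_life_years * SECONDS_PER_YEAR)  # 1/s

atoms_per_liter = solubility_mol_per_l * AVOGADRO
activity_bq_per_l = decay_constant * atoms_per_liter

# Mean time between decays in one liter of saturated water.
mean_wait_hours = 1.0 / activity_bq_per_l / 3600.0

print(f"Atoms of Pu per liter:    {atoms_per_liter:.3g}")
print(f"Activity:                 {activity_bq_per_l:.3g} Bq/L")
print(f"Mean wait between decays: {mean_wait_hours:.1f} hours")
```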

But perhaps I'm being a little too glib here, since the paper cited at the outset of this post, co-authored by Dr. Kersting, who is way smarter than I am, contains the word migration, does it not?

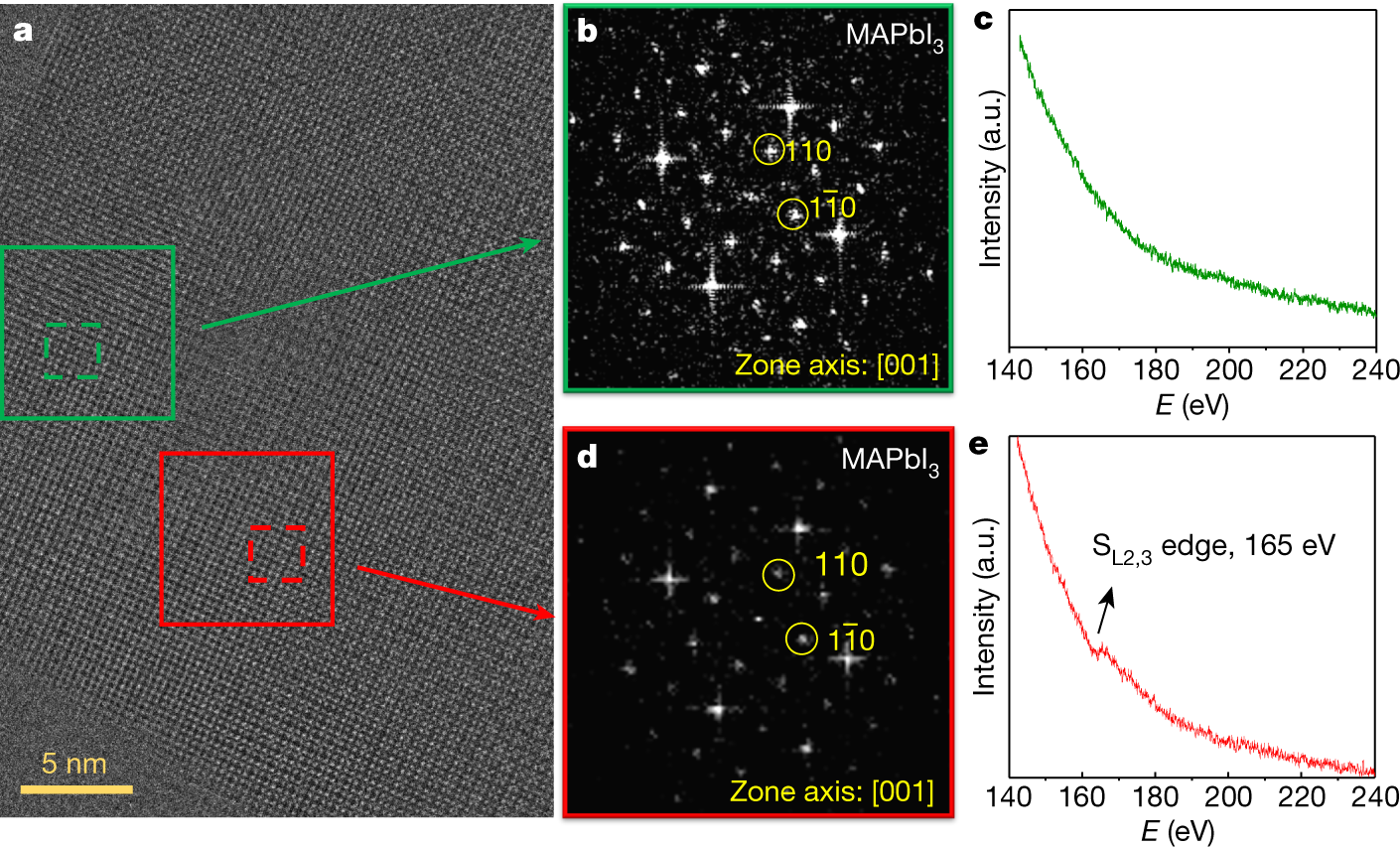

It turns out that there are other mechanisms for the transport of plutonium, and Dr. Kersting summarizes them quite well in her 2013 Inorganic Chemistry paper. The mechanism of interest here is transport on colloids. It is therefore useful to look at some pictures from Dr. Kersting's 2013 paper beyond that posted above:

The caption:

Another term for "Eh–pH diagram" is "Pourbaix diagram." This Pourbaix diagram reflects the very complex and extremely interesting redox chemistry of plutonium. Even were it not an element that will surely prove critical to saving humanity from itself, assuming that humanity does save itself, plutonium would be, and is, of extreme academic interest, being one of the very rare metals that can exist at equilibrium in three oxidation states simultaneously. Just as the presence of oxygen in the planetary atmosphere plays a role in the fact that the oceans contain approximately 4.5 billion tons of uranium in equilibrium with the planetary mantle - the evolution of oxygen accounts for the events at Oklo - the varied oxidation states of plutonium can play a role in its solubility, at least under some circumstances, for example transport to colloid particles, although it's not clear that this is, in fact, how plutonium bearing colloids are formed.

More about the colloids:

The caption:

A real life plutonium bearing colloid from the Hanford site:

The caption:

Now I would like to return to the paper cited at the very outset of this long diatribe, to wit: Kersting et al., Environmental Science & Technology 2019 53 (21), 12238-12246.

In this paper, the authors took colloids formed using real melt glass, retrieved from real underground nuclear tests and stored at Lawrence Livermore National Laboratory, and prepared colloidal minerals (Montmorillonite Sw-y) which make up clay, to understand their ability to transport plutonium. Since, as described in the excerpt at the beginning of this dreary post, some of the nuclear test caverns remain thermally hot, and others are cool, the extraction was performed under conditions designed to mimic the range of these observed conditions. Then they set out to see how well the plutonium transported on colloids was subject to release.

The preparation of the colloids is described in a previous paper by Dr. Kersting and her coauthors, this one: Hydrothermal Alteration of Nuclear Melt Glass, Colloid Formation, and Plutonium Mobilization at the Nevada National Security Site, U.S.A. (Mavrik Zavarin, Pihong Zhao, Claudia Joseph, James D. Begg, Mark A. Boggs, Zurong Dai, and Annie B. Kersting, Environmental Science & Technology 2019 53 (13), 7363-7370). In order to transfer plutonium from the melt glass to the colloids, the experiments were conducted over a number of years, and measured the concentration of two fission products, Eu-152 and Cs-137, plutonium (239+240), and an activation product, Co-60, presumably formed by neutron capture in the iron of the steel casings of the test bombs.

The purpose of the paper cited at the outset of this unreadably long post is described by the authors at the end of the introduction, including some comments on the nature of the samples:

The authors then processed the colloids formed over a period of years as described in their earlier paper, and allowed water to flow over these colloids to see how much plutonium (and cesium) was released in the process:

The conditions under which the desorption tests were conducted are shown in two tables from that paper:

The "percent talk" in table 1 - which is often used misleadingly by advocates of so called "renewable energy" to conceal its failure to address climate change - looks rather frightening until one looks at absolute numbers, displayed by figure 1 from the paper:

The caption:

It is useful to convert the "molar" figure into Bq, using the specific activities (calculated from the decay constant in inverse seconds, not inverse years) of the two plutonium isotopes, 239 and 240, whose ratio, according to the graphic from Dr. Kersting's 1999 Nature paper, shown above, is approximately 1:1 for the Benham and Tybo nuclear weapons tests. One can calculate that the activity in a liter at 2.5 × 10^(-13) M is about 0.1 Bq for Pu-239, and about 0.5 Bq for Pu-240; carrying through some non-significant figures, it works out to one nuclear decay every 7 seconds in the former case, and one decay every 2 seconds in the latter case.
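A minimal sketch of this conversion, treating the full 2.5 × 10^(-13) M as each isotope in turn, is shown below. The half-lives (24,110 years for Pu-239, 6,561 years for Pu-240) are assumed standard values, and the helper function is hypothetical, introduced only for illustration.

```python
import math

AVOGADRO = 6.02214076e23                 # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def activity_bq_per_liter(molarity, half_life_years):
    """Decays per second in one liter at the given molar concentration."""
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)  # 1/s
    return decay_constant * molarity * AVOGADRO

CONCENTRATION_M = 2.5e-13                # molar figure discussed above

# Assumed half-lives in years (not stated in the text).
half_lives = {"Pu-239": 24_110, "Pu-240": 6_561}

activities = {
    isotope: activity_bq_per_liter(CONCENTRATION_M, t_half)
    for isotope, t_half in half_lives.items()
}

for isotope, bq in activities.items():
    print(f"{isotope}: {bq:.2f} Bq/L, one decay every {1.0 / bq:.1f} s")
```

Pu-240 is the more active of the two at equal concentration simply because its half-life is shorter by a factor of a little under four.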

Two other related graphics from the paper:

The caption:

The caption:

Note that these papers refer to laboratory evaluations, and not to real world situations.

It is nevertheless interesting to compare the values herein with the EPA standard for drinking water, which for gross alpha contamination is 15 pCi/liter, which translates to 0.56 Bq/liter. It is important to ascertain what this standard means. It does not mean that if one drinks water exceeding this limit one will immediately die, although, after long experience in dealing with the mentality of anti-nukes, it would not surprise me to learn that people might so interpret it. If this were the case, people would be dropping like flies all over the United States.
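The unit conversion is simple enough to sketch in Python. The only input besides the 15 pCi/liter limit is the definition of the curie, 3.7 × 10^10 Bq; the function names are illustrative.

```python
BQ_PER_CI = 3.7e10            # definition of the curie
BQ_PER_PCI = BQ_PER_CI * 1e-12

def pci_to_bq(picocuries):
    """Convert an activity in picocuries to becquerels."""
    return picocuries * BQ_PER_PCI

def bq_to_pci(becquerels):
    """Convert an activity in becquerels to picocuries."""
    return becquerels / BQ_PER_PCI

epa_gross_alpha_pci_per_l = 15.0
epa_gross_alpha_bq_per_l = pci_to_bq(epa_gross_alpha_pci_per_l)

# Mean time between decays for a source of exactly one picocurie.
seconds_per_decay_at_1_pci = 1.0 / pci_to_bq(1.0)

print(f"15 pCi/L = {epa_gross_alpha_bq_per_l:.3f} Bq/L")
print(f"1 pCi    = one decay every {seconds_per_decay_at_1_pci:.1f} s on average")
```

The same two helpers can be used to put any of the Bq/L figures discussed above on the pCi/L scale used by the EPA.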

Let's consider some real world situations. I live on the Reading Prong, a natural uranium formation that produced a gasp of terror in the New York Times shortly before Chernobyl blew up, where the uranium is in secular equilibrium with its daughter elements, including radium-226. I have measurable radon-222, the gaseous daughter of Ra-226, in my home on the lower level, happily below EPA recommended levels, although I have never checked my well water for Ra-226. The EPA guidance on drinking water specifically refers to this isotope, Ra-226. The EPA guidance is not a function of instantaneous death should one exceed it; rather, it is a function of the PDE (permissible daily exposure) and MDD (maximum daily dose), and assumes that one is drinking the water, and the exposure limit is a very conservative 4 mrem/year. For perspective, a three hour ride in a commercial airliner exposes one to 1 mrem. I just had a CAT scan to evaluate some abdominal pain - happily it does not seem to involve cancer - and was exposed to about 2,000 mrem. Of course, the conservative drinking water standard is connected to ingested radionuclides, which is different than whole body exposure, but again, we all experience internal radiation because our lives depend on having potassium in our bodies and all of the potassium on Earth is radioactive; this natural radiation amounts to about 33 mrem/year.

Following Dr. Kersting's papers on plutonium migration, the Lawrence Livermore National Laboratory published a broader survey of water at the Nevada National (In)security Site: Interpretation of Mineralogical Diagenesis for Assessment of Radionuclide Transport at Pahute Mesa, Nevada National Security Site. It is freely available; anyone can read it. (Dr. Kersting's papers are referenced in this report.) Nevertheless, for convenience I reproduce Figure 6 from the report, which compares the concentration of plutonium in test wells near nuclear test sites with the EPA gross alpha standard:

It appears that only one test well exceeds the EPA limits for drinking water. This is not to say that I recommend drinking well water from the Nevada National (In)security Site; on the other hand, if one did, these data suggest the result would not be fatal.

Speaking of the "real world," while we all wait breathlessly for the grand so called "renewable energy" nirvana which has not come, is not here, and frankly, won't come, we are drilling the shit out of the North American continent, shattering rocks beneath it forever, far beyond the Nevada National (In)security Site, to get the last drops of oil and gas out of them. The purpose of shattering the shit out of rocks is, of course, to increase their surface area. The Marcellus shale, near where I live, contains, besides oil and gas, large amounts of uranium and its daughters, including radium-226 and radon-222. The rocks are shattered using high pressure water, and it happens that water extracts radium-226 and brings it to the surface in a solution of nasty things known as "flowback water," where it is dumped in a largely unregulated fashion.

A great deal has been published on the "NORM" (Naturally Occurring Radioactive Materials) in flowback water, and it is illuminating to compare the radiation levels associated with plutonium resulting from nuclear tests at the Nevada National (In)security Site with flowback water. For one example of such measurements of radiation in flowback water - there are oodles of them available for any interested party - consider this paper: Estimating Radium Activity in Shale Gas Produced Brine (Wenjia Fan, Kim F. Hayes, and Brian R. Ellis, Environmental Science & Technology 2018 52 (18), 10839-10847). Three fracking sites are compared, one on the Marcellus Shale in PA, near where I live, and two in Michigan.

A graphic from that paper:

The caption:

There you have it. Samples of "flowback water" dumped on the surface in Michigan and in Pennsylvania are (with a few exceptions in this graphic) more radioactive than samples of water obtained from test wells near the sites of historical nuclear weapons tests at the Nevada National (In)security Site. After a long life, I am certainly cynical enough to understand that the very same people who wait endlessly for the so called "renewable energy" nirvana that did not come, is not here and will not come, while we frack gas and dump the waste directly in the air or on the land (in flowback water retention ponds), will carry on endlessly about, say, Fukushima while not giving a rat's ass about radium in Michigan and radium in PA that has been brought to the surface because so called "renewable energy" depends on continuous access to dangerous natural gas.

As I often say, "History will not forgive us, nor should it."

Much of what I've written above with respect to radiation exposure consists of something called "facts." Some of these facts have been well known for a very long time, for example about background radiation exposure, but over the last half a century, I've heard a great number of what can only be called "conspiracy theories" about radiation exposure that center around the premise, often spouted by anti-nukes, that there is "no safe level of radioactivity," despite the obvious fact, connected with potassium, that there is no safe level of not having radioactivity exposure, since potassium is an essential element for all living things.

Let me move away from physical science to a quasi "social science" discussion.

Back in 2018 I had the pleasure of attending a lecture that can now be viewed on line at the Princeton Plasma Physics Lab website. One of the slides on the big screen was a picture of Donald Trump. Here is a link to the place where the lecture can be viewed: Science on Saturday: Improbable Research and the Ig Nobel Prizes.

One of the featured Ig Nobel Prizes discussed in the lecture was the now somewhat famous "Dunning Kruger Effect," for which an Ig Nobel was awarded in 2000. The Dunning Kruger Effect is based on a study which concluded that people who are incompetent to comprehend a subject are incompetent to know that they are incompetent. The Science on Saturday lectures take place in New Jersey, a very reliable blue state, and when discussing the award to Dunning and Kruger - which the speaker indicated is actually awarded to stimulate thinking - the speaker flashed a big picture, with an appropriate deadpan pause, of Donald Trump, who at that point occupied the White House, with the result of a loud round of laughter from the audience.

Of course, Donald Trump, the very stable genius, is famous for making endless statements about multiple subjects that begin with the words, "Nobody knows more about..." [insert subject] "...than I do," where all of the subjects he named are subjects about which he knows very little, or, in fact, nothing at all. There are videos on the internet which consist entirely of chains of these statements; if you can tolerate hearing anything at all from this sad excuse for a human being you can Google your way to videos of tandem statements by this fool referring to various subjects.

You can also Google your way to lots of lectures by and interviews of the psychologist David Dunning describing the effect. One of the points he makes is that the effect is not limited to Donald Trump, although Donald Trump may be regarded as a kind of reification of the effect; rather, we all overestimate ourselves in certain areas. For example, something like 88% of people estimate that their own driving skills are in the 25th highest percentile.

One path to over-estimating oneself is to assume that someone who speaks or writes on a particular subject with an air, that is, an affectation, of authority is, in fact, an authority, and thus, falling uncritically into this assumption, end up parroting that person’s views as one's own. This is an example of the famous logical fallacies of appeal to authority and appeal to false authority.

If, of course, one knows nothing at all about a subject, and encounters someone who claims to know it, irrespective of their actual knowledge of the subject, it is quite possible, particularly if one is credulous, to believe whatever that person says, particularly if they enjoy some measure of fame for their position.

I certainly have some experience with being credulous.

I first learned about nuclear power growing up on Long Island, NY, during the controversy surrounding the construction of the Shoreham Nuclear Plant, which, after several billion dollars were spent building it, never provided a watt of power to the grid on Long Island. (It briefly achieved low power criticality.) The local newspaper on Long Island, Newsday, was very hostile to the Shoreham Nuclear Plant, as well as to the company that was building it, LILCO, the (now defunct) Long Island Lighting Company. Another almost local paper that was, and still is, hostile to nuclear energy is, of course, the national “paper of record,” The New York Times, which can still hold forth endlessly on Fukushima and Chernobyl but never, to my knowledge, gives any consideration at all to the 18,000 to 19,000 people who died yesterday, and the additional 19,000 who are dying again today, and the additional 19,000 who will again die tomorrow, all dying, day to day to day to day, from air pollution, 19,000 being, by the way, more people than died yesterday, are dying today, or will die tomorrow from Covid-19.

It is interesting to note that parts of Long Island, notably its south shore area, are prone to being submerged as a result of climate change, a topic never discussed by the journalists who carried on endlessly about evacuating Long Island as a result of a putative melt down of the plant. In fact, parts of the South Shore were submerged during Hurricane Sandy. (The Shoreham nuclear plant was built on a bluff on the North Shore of the Island.) During the storm, a relative of mine on Long Island had to move his entire family to the attic of their home, where they all hunkered down while water came through the floor boards, but thankfully the water subsided before they all drowned.

Now, well into a long scientific career, and having, post-Chernobyl, undertaken a fairly exhaustive study of nuclear technology in the primary scientific literature, I can and do frequently make the joke that one cannot be awarded a degree in journalism if one has passed a college level science course, but this certainly wasn’t my attitude when I was growing up. I generally believed journalists when they wrote about science, and probably the reason was that I didn’t know very much science. (Neither of my parents graduated from high school; one didn’t graduate from junior high school.) I actually thought journalists knew something about science.

In my late teens and early 20s, as I rebelled against my parents' political conservatism in such a way as to assume that if a topic or belief was commonly and widely held by political liberals, I, as a nouveau political liberal, was required to regard it as the right - indeed the only - position to take, I was drawn to journalists who wrote with hostility to nuclear energy. As a result, in my 20s, I certainly was drawn to radical anti-nuclear journalists. An example of such a journalist, which is not to say that I specifically recall reading any of the dangerous and deadly tripe he’s been handing out in a toxic life that has led to the deaths of millions of people from air pollution, would be this guy: Harvey Wasserman.

Speaking of widely held de rigueur beliefs on the left, I return to the happy report that I was banned from a liberal website, Daily Kos, for making a true statement, showing unequivocally that opposing nuclear energy kills people, based on the work of the climate scientist Jim Hansen, who was very popular as a climate scientist at Daily Kos until he said something that didn't jibe with our dogma. It is not true that everyone on the left accepts science irrespective of politics, but of course, it appears to me that neither Markos Moulitsas nor Tim Lange (Meteor Blades on Daily Kos) could have possibly passed a college level science course, since both have degrees in journalism.

Again, and again, and again, "to the last syllable of recorded time:"

Prevented Mortality and Greenhouse Gas Emissions from Historical and Projected Nuclear Power (Pushker A. Kharecha* and James E. Hansen Environ. Sci. Technol., 2013, 47 (9), pp 4889–4895)

Harvey Wasserman, who knows no chemistry, no engineering (electrical or otherwise), nothing at all about the chemistry of semi-conductors, or the chemistry of batteries, who knows no physics, nevertheless felt qualified to write a book on how to save the world, Solartopia! Our Green-Powered Earth, A.D. 2030.

It's 2021, nine years before 2030, the magical year Wasserman evoked in 2006. Humanity is now consuming over 600 exajoules of energy per year. Solar energy doesn't produce 5 of them. When the first edition (of which I'm aware) of Solartopia was published in March of 2006, the concentration of the dangerous fossil fuel waste carbon dioxide in the planetary atmosphere was 382.9 ppm. Yesterday, as of this writing, February 3, 2021, it was 415.73 ppm. (Accessed 02/04/21.) According to the World Health Organization web page, because 2.0 billion people lack access to basic sanitation:

Diarrhoea remains a major killer but is largely preventable. Better water, sanitation, and hygiene could prevent the deaths of 297 000 children aged under 5 years each year.

World Health Organization Fact Sheet

Solartopia indeed. (Bourgeois ethics can be appalling, can they not?)

Harvey Wasserman once put together a huge event in which prominent physicists and physical and semiconductor chemists, Bonnie Raitt, Jackson Browne, Crosby, Stills and Nash, and Bruce Springsteen gathered in front of huge piles of electrically powered amplifiers, consuming thousands and thousands of watts, lit by powerful hundred-thousand-watt floodlights, to post equations to a huge screaming audience about "No Nukes."

Don’t worry. Be happy. Nothing to see here. No Nukes!

The famous rock guitarist and rock singer, Nobel Laureate Glenn Seaborg, chairman of the Atomic Energy Commission during the period when most of the US nuclear reactors were built and came on line, who discovered plutonium, americium, curium, berkelium, and californium, among other elements in the periodic table, and who advised every US President from Truman to Clinton, was not invited to the "No Nukes" concert, even though I'm sure that he could have lectured Bonnie Raitt on how to sing and how to play the slide guitar in a way that everyone would find appropriate, of course.

(For the record, I was a big fan of Bonnie Raitt's music, and when I was playing in clubs as a kid, a fair part of my repertoire, both in folk settings and in electric bands, consisted of interpretations of songs to which I was introduced by her records.)

Harvey Wasserman, impresario extraordinaire of "No Nukes" concerts is described, particularly by other journalists, as an "activist." Is this the same as expert? No, it isn't. He is an "expert" on nuclear energy in the same way as anti-vaccine "activist" former Playboy nude model and "actress" Jenny McCarthy is an expert on vaccines. Indeed, since nuclear energy saves lives that would otherwise be lost to air pollution Wasserman, like McCarthy applies his appalling ignorance to kill people.

Wasserman was born on New Year's Eve, 1945. It's surprising that he's not dead, since nuclear power didn't go away despite his best efforts, and of course, in 2030, if he isn't killed by plutonium atoms, he will be 85 years old. If he is still alive, and he may be, he will be another example of a petulant bourgeois airhead making glib promises about what future generations will do as an excuse for not being able to do himself what he predicts others will do. In 2030, the young men and women who were born in 2000, and who are in their early careers, will be living with fewer available resources, a vastly more damaged environment, and, oh yeah, abandoned solar cells that will have added to the existing intractable vast masses of electronic waste. Every twenty-five years, all of the existing infrastructure of so called "renewable energy" will need to be replaced, in addition to any growth these unfortunate systems may experience.

Solartopia.

The actions of "activists" have consequences. In the 15 years since the first publication of Solartopia (which was in 2006 to my knowledge), somewhere between 90 million and 105 million lives were lost to air pollution. This is the equivalent of completely wiping out that so called "renewable energy" nirvana Germany, killing every man, woman and child, and then some. The concentration of the dangerous fossil fuel waste in the planetary atmosphere has risen by almost 33 ppm, or in the "percent talk" of "renewable energy" advocates, by about 8.6% of the value in 2006.

Solartopia.

In the year that Harvey Wasserman was born, the very first plutonium based nuclear weapon was detonated in New Mexico, shortly after the first multi-kilogram quantities of the element had been synthesized and isolated from large quantities of uranium.

Nevertheless, Harvey Wasserman is still alive, despite the fact that during his life time, nuclear power has grown from a rather primitive affair in the Manhattan Project era, exclusively devoted to war, to a large commercial activity that represents the world's largest infinitely scalable source of carbon dioxide free energy ever developed. I guess, in spite of his massive paranoia, fed by his massive ignorance, nuclear power didn't wipe out the world, or even cause a death toll that approximates, even on a minuscule scale, the death toll associated with air pollution while we all wait, like Godot, through endless failed promises, for Solartopia.

Solartopia.

Above I made this statement: "Harvey Wasserman, who knows no chemistry, no engineering (electrical or otherwise), nothing at all about the chemistry of semi-conductors, or the chemistry of batteries, who knows no physics, nevertheless felt qualified to write a book..."

Given that I find Harvey Wasserman to be a disgusting excuse for a human being, a Jenny McCarthy of energy, is it really possible for me to assert what he does and does not know, given that I would be unwilling to spend more than 50 seconds in a room with him? It is true that when I was participating at Daily Kos, I had occasion to come across some of his writings there, and however far I went to endure them, I certainly can't recall any evidence that he knew any science, but am I being unfair? Is it possible that he, and Markos Moulitsas or Tim Lange have taken science courses and have passed them with a grade of C or better? Maybe. I see no evidence of it, but certainly it is possible. Am I being unfair?

I don't think so. I really don't have to study the knowledge base of Jenny McCarthy, who became an expert on autism, apparently, by being photographed naked in various provocative poses. If I really want to know anything about autism, I could alternatively - and I have actually done this - attend a lecture by Princeton Professor Sam Wang where he discussed the origins of autism, among other subjects.

Fairly or not - and I'm not inclined to be "fair" to either Jenny McCarthy or to Harvey Wasserman nor their many intellectual and moral equivalents - I see anti-vaxxers and anti-nukes as generic. Their beliefs - and they are nothing more than beliefs unconnected with facts - define completely enough who they are. At least to a first approximation, these beliefs also define what they know, to the point there can be no interest in further understanding how or why they came to their toxic, and frankly dangerous, ignorance. It is therefore a waste of time to get to the details of what they do and do not know.

On May 9, 2017, at Hanford, one of two tunnels holding rail cars loaded with retired chemical reactors once used to extract weapons grade plutonium from fuel rods collapsed. The tunnels were called the "Purex tunnels" after the PUREX process to isolate plutonium, invented by Nobel Laureate Glenn Seaborg, who never deigned to lecture Bonnie Raitt on how to play slide guitar. They were built in 1955, and were supported by steel and wood beams that had severely corroded. A detailed engineering description of the tunnels can be found here: Purex Tunnel One Engineering Evaluation.

Ten days after the collapse the radiation levels in the area were measured. They are here: Sampling Data Purex Tunnels

If one reads through accounts of the response to this event, it becomes very clear that it involved tens of millions of dollars to address. From the radiation levels detected in the area, it's not immediately clear to me that even one life has been saved by the response, because the risk to human life at these levels is almost vanishingly small. It seems fairly obvious to me that if the same amount of money as has been spent on addressing the collapse of Hanford Purex Tunnel #1 had been spent building systems to handle human fecal waste for those who have no means of processing it, many more lives might have been saved than would have been lost if nothing had been done about the collapse of Purex Tunnel #1 beyond pouring concrete over it, if even that were worth it. In the actual event, well paid highly trained engineers, vast construction crews etc, etc expended their talents to solve the "catastrophe" of the tunnel collapse.

I have no idea how many opening posts I've authored at Democratic Underground and other blogs over the last two decades on various scientific subjects. Years ago, I might have recalled writing the bulk of them, but I no longer do. I am old, near the end of my life. It is clear that the overwhelming bulk of my scientific writings have concerned nuclear energy, all built around the oft repeated premise that nuclear energy need not be risk free to be vastly superior to all other options, including the wasteful and failed adventures in so called "renewable energy." The redundant tautological statement I often make is that "the only thing nuclear energy needs to be to be better than everything else is to be better than everything else, which it is."

I would have never considered the collapse of Hanford Purex Tunnel #1, were my attention not drawn to it when a correspondent called up one of my old and forgotten posts on nuclear energy to sneer at me about the "catastrophe" of a collapsed tunnel built in 1955 and largely forgotten. Of course, over the last two decades of blogging, I've seen lots of sneering at my position on nuclear energy, almost all of it abysmally stupid, but for some reason this particular sneer stuck in my mind with a mixture of amusement and disgust and perhaps more than a little horror, since air pollution kills and has been killing 6 to 7 million people per year for decades now while we all wait, like Godot, for the grand renewable energy nirvana that never comes and never will come. Air pollution killed more people today than Covid-19 killed on its worst day. It will kill more people tomorrow than Covid-19 killed on its worst day. Every day of this month, and next month, and the month after that, air pollution will kill more people than Covid-19 killed on its worst day.

But, at least in the mind of someone here, I needed to know, apparently, all about Purex Tunnel #1 at the Hanford nuclear weapons plant, and now I do.

Solartopia.

I find all of the Harvey Wasserman wannabes tiresome, and after arguing - sometimes heatedly, at no benefit to myself or to humanity, since it is clear that no amount of information can change these people's minds; indeed they go full Qanon with conspiracy theories at times - I've decided simply to ignore them. How much "there is no safe amount of radiation," and "government coverups" and related horseshit can one take? I've learned to use here the tool not available at Daily Kos, the magnificent DU "ignore list." I am too old to spend too much time on stupidity.

Over the last few years, I've referred, with my penchant for sarcastic shorthand, to the evocation of the collapse of Hanford's Purex Tunnel #1 as a kind of catch all for the intellectual (or anti-intellectual) myopia of anti-nukes, their curious, or better, incurious, selective attention to the point of obliviousness. I obviously included the author of the evocation in question on my ignore list, but the evocation itself sticks somehow in my mind as an ultimate "head up the ass" statement, a kind of distraction from reality worthy of Fox News on some level. As such, I've referred to it from time to time in this space, and recently, when using a computer on which I declined to log in, I noted that one of my references to this evocation generated some accusatory commentary from a person on my ignore list. For old time's sake, I temporarily shrank my ignore list to remark as follows:

The response was this:

"I will say though, as the post about the collapsed tunnel was a perfect indication of the trivializing mentality of anti-nukes, their almost paranoid belief that if a single atom of a radionuclide is not permanently contained it will magically tunnel into their tiny brains and kill them, it stuck in my mind as a kind of symbol."

Total straw man.

Straw man? Should I be offended?

Let's leave aside the question of whether a lazy reference to a logical fallacy somehow matters in the context of a comparison of the collapse of Hanford's Purex Tunnel Number 1 to the death of almost 24 million people, as of this writing, from air pollution since the tunnel's collapse.

It gets better than this, because the Dunning-Kruger effect is operative here. A concern about the collapse of Hanford's Purex Tunnel #1 certainly implies something, but perhaps the correspondent, not understanding a damn thing about the migration of radionuclides, which I discussed at length above, is completely unaware of what is being implied by raising his or her point about the tunnel collapse on a planet where 18,000 to 19,000 people die every day from air pollution.

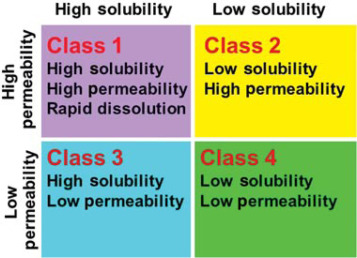

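In the pharmaceutical industry there is a concept built around biologically active species known as the "BCS classification," the Biopharmaceutics Classification System, which sorts compounds into four classes according to their solubility and their permeability.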

It is usually represented graphically:

We have already established above that PuO2 has very low solubility, placing it in either BCS Class II or Class IV, but what about its permeability? Can we determine whether it easily crosses the membranes of the alimentary canal to get absorbed into the body?

The first known human ingestion of plutonium occurred on August 1, 1944, when a young chemist working on the Manhattan Project, Don Mastick, had a vial containing about 10 mg of plutonium chloride explode in his face, with some of it landing in his mouth. (It was not, as reported in Eileen Welsome's otherwise wonderful book The Plutonium Files, the world supply. Gram quantities of plutonium were available by late 1944.) Mastick's stomach was pumped, and some of the plutonium he swallowed was recovered from his feces and urine. In urine, the concentration was extremely low, near the limit of detection, suggesting BCS Class IV. How much he actually absorbed is not precisely known, although his face and breath were radioactive for some time. He nevertheless lived to be 87, dying in 2007.

Many controlled animal studies, and, regrettably, unethical human studies - as detailed in Ms. Welsome's book - were also conducted in subsequent years, and indeed there have been a number of accidental ingestions of plutonium since Dr. Mastick's time.

Much of what we know about the permeability of plutonium comes from fallout from open air nuclear tests, particularly among Marshall Islanders. The "f1" value, the fraction absorbed from the alimentary tract, seems to be, for the (predominant) oxide, on the order of 10^(-4) or 10^(-5). (cf. Alimentary Tract Absorption (f1 Values) for Radionuclides in Local and Regional Fallout from Nuclear Tests, Ibrahim, Shawki A.; Simon, Steven L.; Bouville, André; Melo, Dunstana; Beck, Harold, Health Physics: August 2010, Volume 99, Issue 2, p 233-251.) This information places plutonium oxide in BCS Class IV, which, in pharmaceutical settings, is the most problematic class with which to deal. Overall, it means that the blood level from drinking the Nevada National (In)security Site's worst contaminated water, the well water adjacent to the Chancellor Nuclear Test location, at about 55 pCi/liter, would be something like 0.003 pCi, which implies that one would need to wait about 6 minutes to detect a single nuclear decay from a single plutonium atom anywhere in the human body. This does seem to call into question whether a stupid person whining insipidly about "strawman" arguments while clearly lacking a shred of reasoning ability overall is in fact knowledgeable enough to assert whether my assertion about single atoms is "unfair," although, again, toxic thinking does not deserve fairness at all; sarcasm is entirely appropriate. Implications depend on perceptional ability, and not necessarily the raw value of a statement. If one does not understand what one is saying, one can be, and often is, a la Dunning-Kruger, unaware of implications.
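
The decay-interval arithmetic in the paragraph above can be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: the intake of one liter of water, the function names, and the treatment of f1 as a simple multiplier on ingested activity are mine for illustration and are not taken from the cited paper. The only hard fact used is the unit conversion 1 pCi = 0.037 decays per second.

```python
# Rough sketch: absorbed plutonium activity and the mean time between decays.
# Assumptions (not from the cited sources): one liter of water is drunk, and
# f1 is applied as a simple fraction of the ingested activity that is absorbed.

PCI_TO_BQ = 0.037  # 1 Ci = 3.7e10 Bq, so 1 pCi = 0.037 decays per second


def absorbed_activity_pci(concentration_pci_per_l, liters, f1):
    """Activity (pCi) absorbed from the gut for a given intake and f1 value."""
    return concentration_pci_per_l * liters * f1


def mean_seconds_between_decays(activity_pci):
    """Mean waiting time (s) between decays for a given activity in pCi."""
    return 1.0 / (activity_pci * PCI_TO_BQ)


# Bracket the estimate with the two f1 values quoted in the text.
for f1 in (1e-4, 1e-5):
    activity = absorbed_activity_pci(55.0, 1.0, f1)
    minutes = mean_seconds_between_decays(activity) / 60.0
    print(f"f1={f1:g}: {activity:.5f} pCi absorbed, "
          f"about {minutes:.0f} minutes between decays")

# The round figure used in the text.
minutes_for_quoted_value = mean_seconds_between_decays(0.003) / 60.0
print(f"0.003 pCi: about {minutes_for_quoted_value:.0f} minutes between decays")
```

On these assumptions an absorbed activity of 0.003 pCi works out to a mean interval between decays on the order of two and a half hours, which is, if anything, longer than the interval quoted above; the conclusion that the activity is vanishingly small does not turn on the exact figure.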

Despite my disinclination to be "fair," in fairness, to some extent, the permeability is determined by oxidation state, and by complexation, but it is certainly the case that residual plutonium at the site of Hanford Purex Tunnel #1 is the oxide, particularly since the radioactivity at the site has been measured with respect to one real route for the physiological incorporation of plutonium, inhalation, and found not to be elevated above background (see the link above). (The other is injection, or entry through a wound, the latter a situation that may have obtained in the only nuclear war ever observed, which took place in 1945, over 75 years ago. It would be a sane world in which we spent as much time worrying about oil wars, and the diversion of petroleum products to weapons of mass destruction, as we do worrying about preventing nuclear wars, since nuclear wars are no longer observed, even when certifiably insane people like, say, Donald Trump, have access to such weapons.)

The distribution of plutonium in organs and its pharmacokinetics (DMPK) have been studied in rats, using soluble forms of the element, including the nitrate, by the two viable routes of administration, inhalation and injection:

(cf. Table 2, Waylon Weber, Melanie Doyle-Eisele, Dunstana R. Melo & Raymond A. Guilmette (2014) Whole-body distribution of plutonium in rats for different routes of exposure, Int J of Radiation Biology, 90:11, 1011-1018).

A knowledgeable person could argue that in the Purex process, plutonium is present as a (soluble) nitrate; however, the process relies on precipitating the nitrate as an oxalate, which on occasion spontaneously decomposes to the insoluble dioxide. This was almost certainly a problem, often an intractable problem, in the 1950's, when the chemical reactors in Hanford Purex Tunnel #1 were discarded there, and it may be, in fact, the reason they were placed there. I have no idea. I do know that up to this day, the issue of the formation of the oxide from the oxalate is still the subject of research: A review of plutonium oxalate decomposition reactions and effects of decomposition temperature on the surface area of the plutonium dioxide product, R.M. Orr, H.E. Sims, R.J. Taylor, Journal of Nuclear Materials, Volume 465, 2015, Pages 756-773.

It is very clear that any plutonium remaining on these reactors is in the form of the insoluble dioxide, which is why, in all of the literature and reports on the subject of this super tragic event, the collapse of a 20 meter section of Hanford Purex Tunnel #1, there is no discussion of plutonium.

So what is discussed in this adventure on which millions of dollars have been spent without any evidence that any lives would have been lost without such an expenditure?