NNadir

NNadir's Journal

Global groundwater wells at risk of running dry

The paper I'll discuss in this post is this one: Global groundwater wells at risk of running dry (Scott Jasechko and Debra Perrone, Science 372, 418–421 (2021), 23 April 2021)

A news item referring to the paper in the same issue of Science which is likely to be open sourced is here: The hidden crisis beneath our feet (James S. Famiglietti, Grant Ferguson, Science 23 Apr 2021: Vol. 372, Issue 6540, pp. 344-345)

If memory serves me well, I have referred a number of times to a lecture I attended by Dr. Robert Kopp of Rutgers University, this one: Science on Saturday: Managing Coastal Risk in an Age of Sea-level Rise, wherein, in answer to a question from the audience (mine), Dr. Kopp explained that about 10% of sea level rise can be attributed to water pumped out of the ground and allowed to evaporate. Most of this water is used for agriculture, but it is also used in cities and in homes. I am guilty here. The water in my home is well water, which as I happen to know, contains some interesting and unpleasant substances.

This suggests - I've been musing about this a lot lately - that one path to addressing climate change and restoring shorelines would include refilling the aquifers we've drained in the last 50 years or so, in yet another expression of our accelerating contempt for all future generations, along with refilling dried up lakes like Owens Lake in California and dying bodies of water like Lake Baikal, the Aral Sea, the Caspian Sea, etc...

My most recent episode of such musings out loud is here: Ion-capture electrodialysis using multifunctional adsorptive membranes, which is preceded by a long, dull digression on reactor physics and the mercury content of the oceans.

To anyone who argues that refilling groundwater by desalination of ocean water, refilling dead lakes, dead seas, etc. is geoengineering, let me say this.

There is a television show on the Science channel called Engineering Catastrophes which I watch from time to time to give me ammunition to make fun of my son the engineer. It's all about failures of bridges, buildings, roadways, etc. from things like hydrogen embrittlement, improper analysis of stresses, poor materials etc. However, everything on this show is small potatoes compared to the biggest engineering catastrophe, the existing haphazard, uncontrolled and unplanned geoengineering of the entire planet, most of which has been a catastrophe of the last one hundred years.

This is not really a water problem; it is an energy problem. No, neither wind turbines in lobster fisheries off the coast of Maine nor any other wind scheme designed to industrialize wilderness will produce even a fraction of the energy required, owing to thermodynamic, mass intensity, reliability and lifetime considerations. The sooner we lose our affection for this nonsense, the more hope we will have.

The goal of geoengineering should be to restore, and nothing more.

Anyway, future generations are in a world of hurt according to this paper. From the paper's introduction:

When groundwater wells run dry, one common adaptation strategy is to construct deeper wells (11). Deeper wells tend to be less vulnerable than shallower wells to climate variability (12) and groundwater level declines (13), but even relatively deep wells are not immune to long-term reductions in groundwater storage. Despite the role of wells as a basic infrastructure used to access groundwater, information about the locations and depths of wells has never before been compiled and analyzed at the global scale. Analyzing groundwater resources at the global scale is becoming increasingly important because of groundwater’s role in virtual water trade, international policy, and sustainable development (14, 15). Nevertheless, we emphasize that groundwater is a local resource influenced by hydrogeologic conditions, water policies, sociocultural preferences, and economic drivers.

Here, we compiled ~39 million records of groundwater well locations, depths, purposes, and construction dates (supplementary materials section S1), which provide local information at the global scale. These observations promote a better understanding of spatiotemporal patterns of well locations and depths (16, 17). Groundwater data are notoriously difficult to collect and collate (18, 19). The ~39 million wells are situated in 40 countries or territories that represent ~40% of global ice-free lands (average data density of 0.7 wells/km²; Fig. 1). Half of all global groundwater pumping takes place within our study countries or territories [fig. S2, reference (2), and supplementary materials section S5.1], which are home to >3 billion people (table S1).

Fig. 1 Groundwater well depths in countries around the globe.

The caption:

The dataset contains ~39 million wells in 40 countries or territories (see inset map). Blue points mark shallower wells and red points mark deeper wells in Canada, the United States (white areas mark states where data are unavailable), and Mexico (A); Argentina (partial coverage), Bolivia (partial coverage), Brazil, Chile, and Uruguay (B); Iceland, Portugal, Spain, France, Germany (partial coverage), Denmark, Sweden, Norway, Italy, Slovakia, Slovenia, Belgium, Poland, Latvia, Estonia, Czech Republic, Hungary, United Kingdom, and Ireland (C); Namibia and South Africa (D); Thailand and Cambodia (E); and Australia (F). National-level analyses are available in figs. S37 to S435.

Fig. 2 Fraction of areas with deeper well drilling versus shallower well drilling for five time periods among study countries.

The caption:

Well-deepening trends are more common than well-shallowing trends for the majority of time periods for the majority of countries (national-level analyses in figs. S37 to S435). (A) The fraction of 100 km by 100 km study areas where wells are being drilled deeper over time (Spearman ρ > 0; regression of well completion depth versus well construction date). Each diamond represents well construction depth variations over time for a given country (e.g., blue diamonds are regressions for years 2000–2015; see legend above figure); some points overlap. (B to L) Spearman ρ determined by regression of well depth versus construction date for the time interval 2000–2015 in 11 countries [i.e., the fraction of all 100 km by 100 km areas in these maps with Spearman ρ > 0 correspond to the blue diamonds in (A)]. Blue shades mark 100 km by 100 km areas where wells are being constructed shallower over time (i.e., Spearman ρ < −0.1); orange and red shades mark 100 km by 100 km areas where wells are being constructed deeper over time (i.e., Spearman ρ > 0.1.)

Fig. 3 Well construction depth trends at locations close to ( 0) significantly (Spearman P < 0.05) over time.


An excerpt of the conclusion of this short paper:

...Groundwater wells supply water to billions of people around the globe (2, 13, 14, 36). Groundwater depletion is projected to continue in some areas where it is already occurring, and even expand to new areas not yet experiencing depletion (37)...

...Our work highlights the vulnerability of existing wells to groundwater depletion because (i) many wells are not much deeper than the local water table, making them likely to run dry with even modest groundwater level declines (supplementary materials section S6), and (ii) deeper well construction is common but not ubiquitous where groundwater levels are declining...

History will not forgive us, nor should it.

Have a nice weekend.

A day in the life.

The rate of de novo genetic mutations in the children of parents exposed to Chernobyl radiation.

The paper I'll discuss in this post is this one: Lack of transgenerational effects of ionizing radiation exposure from the Chernobyl accident M. Yeager et al., Science 10.1126/science.abg2365 (2021).

This week marks the 35th anniversary of the steam explosion at the Chernobyl Nuclear Power Plant. The graphite moderator in the plant burned for weeks, and much of the inventory of volatile fission products was released into the environment and detected in easily measurable amounts all across Europe, as far away as Scotland.

This event garnered huge attention at the time and still garners significant attention.

It is reported that about six to seven million people die each year from air pollution, meaning that about 200-250 million people have died from air pollution since the explosion of the nuclear reactor, an explosion that proved in the minds of many that nuclear power is "too dangerous," while apparently the combustive use of fossil fuels and biomass, responsible for these 200-250 million deaths, is not "too dangerous." Neither is climate change "too dangerous." These facts, the deaths from air pollution and the acceleration of climate change despite endless talk about, and expenditures on, so called "renewable energy," do not, unlike Chernobyl, garner huge attention.

Go figure.
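The 200-250 million figure is simple arithmetic: the annual toll times the number of years since 1986. A minimal Python sketch of that back-of-the-envelope check, assuming (as the text does) a constant 6 to 7 million deaths per year over the whole period rather than a year-by-year series:

```python
# Rough check of the cumulative air-pollution death toll quoted above.
# Assumption: a constant annual rate of 6-7 million deaths over the whole
# period, which is the figure quoted in the text, not a measured series.
ACCIDENT_YEAR = 1986
POST_YEAR = 2021  # year of the Science paper discussed in this post


def cumulative_deaths(deaths_per_year: float, years: int) -> float:
    """Deaths accumulated at a constant annual rate."""
    return deaths_per_year * years


years = POST_YEAR - ACCIDENT_YEAR
low = cumulative_deaths(6e6, years)
high = cumulative_deaths(7e6, years)
print(f"{years} years: {low / 1e6:.0f} to {high / 1e6:.0f} million deaths")
```

Over 35 years this gives 210 to 245 million, which is where the "about 200-250 million" range comes from.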

From the introduction to the paper:

Only recently has it been feasible to comprehensively investigate DNMs genome-wide at the population level in humans by whole-genome sequencing (WGS) of mother/father/child trios. Recent reports of human DNMs characterized by WGS of trios estimate between 50 and 100 new mutations arise per individual per generation (2, 4–8), consistent with the population genetic estimate that the human mutation rate for single-nucleotide variants (SNVs) is approximately 1×10⁻⁸ per site per generation (9, 10). The strongest predictor of DNMs per individual is paternal age at conception (2–6, 8) with an increase of 0.64-1.51 per one-year increase in paternal age (6, 8, 11) while a maternal effect of approximately 0.35 per one-year increase in age was observed (6, 8, 12). Transgenerational studies of radiation exposure have primarily focused on disease (cancer, reproductive, and developmental) outcomes and have reported inconclusive results (13, 14).

Exposure to ionizing radiation is known to increase DNA mutagenesis above background rates (15, 16). Animal and cellular studies suggest high doses of ionizing radiation can lead to DNMs in offspring, particularly through double-stranded breaks (13, 17). Human studies have sought a biomarker of prior radiation injury (13, 18, 19), but have examined a small number of minisatellites and microsatellites, yielding inconclusive results (20–23). A WGS study of three trios from survivors of the atomic bomb in Nagasaki did not reveal a high load of DNMs (20), while a single-nucleotide polymorphism (SNP) array study of 12 families exposed to low doses of Caesium-137 from the Goiania accident in Brazil reported an increase in large de novo copy-number variants (24). No large comprehensive effort has explored DNMs genome-wide in children born from parents exposed to moderately high amounts of ionizing radiation yet possible genetic effects have remained a concern for radiation-exposed populations, such as the Fukushima evacuees (25).

Herein, we examine whether rates of germline DNMs were elevated in children born to parents exposed to ionizing radiation from the 1986 Chernobyl (Chornobyl in Ukrainian) disaster, where levels of exposure have been rigorously reconstructed and well-documented (26). Our study focused on children born of enlisted cleanup workers (“liquidators”) and evacuees from the town of Pripyat or other settlements within the 70-km zone around Chernobyl Nuclear Power Plant in Ukraine (27) after the meltdown, some of whom had extremely high levels of radiation exposure and several of whom experienced acute radiation syndrome. We performed Illumina paired-end WGS (average coverage 80X), SNP microarray analysis, and relative telomere length assessment on available samples from 130 children from 105 mother-father pairs. The parents had varying combinations of elevated gonadal ionizing radiation exposure from the accident (tables S1 to S3), and included a combination of exposed fathers, exposed mothers, both parents exposed and neither parent exposed (27)...
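To see why a per-site rate of about 1×10⁻⁸ is "consistent with" 50 to 100 new mutations per child, multiply it by the number of sites in a diploid genome. A rough Python sketch; the genome size of about 6.2 billion sites (two copies of roughly 3.1 billion bases) is my assumption, not a number from the paper, and the paternal age figures are the 0.64-1.51 per year quoted above:

```python
# Back-of-the-envelope: per-site mutation rate x diploid genome size.
# Assumption (not from the paper): ~6.2e9 diploid sites (2 x ~3.1e9 bases).
MUTATION_RATE = 1e-8      # SNVs per site per generation (quoted above)
DIPLOID_SITES = 6.2e9     # assumed, approximate

expected_dnms = MUTATION_RATE * DIPLOID_SITES
print(f"Expected SNV DNMs per child: ~{expected_dnms:.0f}")


def paternal_age_increment(years_older: float, per_year: float) -> float:
    """Extra DNMs for a father `years_older` years older, at `per_year` DNMs per year."""
    return years_older * per_year


# Quoted range: 0.64-1.51 additional DNMs per one-year increase in paternal age.
low = paternal_age_increment(10, 0.64)
high = paternal_age_increment(10, 1.51)
print(f"Ten extra years of paternal age: ~{low:.1f} to {high:.1f} more DNMs")
```

The product lands near 60, inside the 50 to 100 range, and a father ten years older would be expected to pass on only something like 6 to 15 additional DNMs.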

Some figures from the paper:

The caption:

Analyses are presented by increasing paternal and maternal age at conception, paternal and maternal dose, birth year of child, and paternal and maternal smoking at conception. All plots are univariate and do not account for other potentially correlated variables (for example, maternal age does not account for high correlation with paternal age).

The caption:

n = number of children sequenced (adapted from (39)). Liftover was used to convert coordinates to hg38 for all studies and the reference for CpG sites were defined with respect to that reference sequence. Only autosomes were included. Error bars show binomial 95% confidence intervals. Studies included Kong (2); Wong (8); Francioli (4); Michaelson (3); Jónsson (6); and Chernobyl (present study).

Some additional text:

Our study evaluated peripheral blood from adult children conceived months or years after the Chernobyl accident, which limited the ability to assess exposure closer to conception; however, there was no evidence of notable differences in DNMs in children born the following year (1987). Since these families were recruited several decades after the accident, we acknowledge potential survivor bias among sampled children, although this is unlikely since there is no consistent demonstration in humans of sustained clinical effects of preconception ionizing radiation exposure (36)...

....Although it is reassuring that no transgenerational effects of ionizing radiation were observed in adult children of Chernobyl cleanup workers and evacuees in the current study, additional investigation is needed to address the effects of acute high-dose parental gonadal exposure closer to conception. The upper 95% confidence bound suggests the largest effect consistent with our data is 1 Gy), but lower maternal dose was not associated with elevated DNMs, consistent with animal studies (13). Furthermore, our analysis of 130 adult children from 105 couples using 80X coverage of short-read technology suggests that if such effects on human germline DNA occur, they are uncommon or of small magnitude. This is one of the first studies to systematically evaluate alterations in human mutation rates in response to a man-made disaster, such as accidental radiation exposure. Investigation of trios drawn from survivors of the Hiroshima atomic bomb could shed further light on this public health question

For the record, I am an older father, and therefore my sons are likely to carry more DNMs than the children of men who were younger than I was when they became fathers. If so, these were happy mutations, since my sons are better men than I was at their age. I love my mutant boys.

Have a nice day tomorrow.

A daughter decides to do an impression of her mom working from home in the age of Covid.

Sign up for a lecture by Amartya Sen, "Attacks on Democracy: Challenges and Solutions" at eCornell.

eCornell: ATTACKS ON DEMOCRACY Challenges and Solutions With Amartya Sen

Brief Excerpts from the Wikipedia page about him:

He is currently a Thomas W. Lamont University Professor, and Professor of Economics and Philosophy at Harvard University.[4] He formerly served as Master of Trinity College at the University of Cambridge.[5] He was awarded the Nobel Memorial Prize in Economic Sciences[6] in 1998 and India's Bharat Ratna in 1999 for his work in welfare economics. The German Publishers and Booksellers Association awarded him the 2020 Peace Prize of the German Book Trade for his pioneering scholarship addressing issues of global justice and combating social inequality in education and healthcare...

...Sen's interest in famine stemmed from personal experience. As a nine-year-old boy, he witnessed the Bengal famine of 1943, in which three million people perished. This staggering loss of life was unnecessary, Sen later concluded. He presents data that there was an adequate food supply in Bengal at the time, but particular groups of people including rural landless labourers and urban service providers like barbers did not have the means to buy food as its price rose rapidly...

...On one morning, a Muslim daily labourer named Kader Mia stumbled through the rear gate of Sen's family home, bleeding from a knife wound in his back. Because of his extreme poverty, he had come to Sen's primarily Hindu neighbourhood searching for work; his choices were the starvation of his family or the risk of death in coming to the neighbourhood. The price of Kader Mia's economic unfreedom was his death. Kader Mia need not have come to a hostile area in search of income in those troubled times if his family could have managed without it. This experience led Sen to begin thinking about economic unfreedom from a young age.

In Development as Freedom, Sen outlines five specific types of freedoms: political freedoms, economic facilities, social opportunities, transparency guarantees, and protective security...

These eCornell lectures are quite wonderful. The last one I attended featured a conversation with Bill Clinton on exactly this subject, saving democracy...

I don't plan to miss this one.

Go ahead, I dare you, ask for time on Badger and Grizzly...

I'm not sure that this is acceptable on a family blog, but here goes:

I came across this text today:

Therefore, we propose a total one-year allocation of 5,050,000 CPU-hrs on Badger and Grizzly: 25,000 for (1) + 25,000 for (2) + 5,000,000 for (3). This should be divided into 50,000 CPU-hrs on Badger for (1) and (2) and 5,000,000 CPU-hrs on Grizzly for (3). Total data storage needs are 10 TB in Lustre scratch space and Campaign Storage space. Many of the large trajectory files may be deleted after publication of the resulting paper; hence, we do not need as much archival storage space. We ask for 5 TB Archival Space.

It's from here:

Salts in Hot Water: Developing a Scientific Basis for Supercritical Desalination and Strategic Metal Recovery

Badger and Grizzly indeed...

In the battle against senility, I've managed to smoothly and correctly spell "pyrolysis."

I don't know why, but for the last several years, whenever I go to spell the word "pyrolysis," I find myself typing pyrrolysis, pyrollysis, pyrrollysis, etc.

I've noticed that in the last several months, I'm spelling the word correctly every time.

When I was a kid, I thought I was a candidate for spelling bee competitions. The invention of the computer disabused me of that notion, but now, near the end of my life, I've managed to correctly spell, every time, this word, which I run across several times a week, sometimes, like today, ten or twelve times in a single day.

Ion-capture electrodialysis using multifunctional adsorptive membranes

The paper I'll discuss in this post is this one: Ion-capture electrodialysis using multifunctional adsorptive membranes (Adam A. Uliana, Ngoc T. Bui, Jovan Kamcev, Mercedes K. Taylor, Jeffrey J. Urban, Jeffrey R. Long, Science 16 Apr 2021: 296-299)

Continuous processes are, in general, economically and environmentally superior to batch or discontinuous processes. Highly efficient processes are economically and environmentally superior to inefficient processes.

In the case of energy generation, however, demand is highly variable, albeit in a fairly predictable way, particularly in the case of electricity, where a profound material and thermodynamic penalty is associated with storage, including the much-hyped use of batteries, which are often presented as a cure-all for the profound environmental flaws of so called "renewable energy." From my perspective - and I'm not shy about stating it at all - batteries will make the already onerous environmental cost of so called "renewable energy" worse, not better, and will cause the already rapidly deteriorating situation with respect to climate change to accelerate ever faster. A battery is a device that wastes energy, and in particular, the energy it wastes is already a thermodynamically degraded form of energy, electrical energy. The production of energy in any form will never, under any circumstances involving any device, be risk free, nor will it be completely and totally environmentally neutral. Thus wasting energy is a very bad and, frankly, dangerous idea.

Period.

One of the serious environmental and economic drawbacks of so called "renewable energy" is that it is discontinuous by nature, and the discontinuities are entirely disconnected from demand and not really predictable, even in the solar case, where one can easily construct tables of sunrises and sunsets for any place on Earth as far into the future as one wishes. This often results in electricity being worthless, in such a way that no producer of energy can recover the costs of their infrastructure. This accounts for the fact that countries heavily dependent on so called renewable energy - which is advertised disingenuously as being "cheap" - have the highest consumer prices in the OECD.

Famously or infamously for anyone who has suffered exposure to my position on energy and the environment, I am an advocate of nuclear energy, which I regard as the only sustainable and environmentally acceptable form of energy there is.

There is a problem with existing nuclear infrastructure however that needs to be discussed, and which is obvious to anyone taking a sober and honest look at it.

One hears from time to time claims about nuclear plants that could be designed to follow demand loads for electricity - a friend of mine often points out that this is already done on nuclear submarines - as if electricity should continue to be the product, as it has been for more than half a century, of a nuclear plant; we call them nuclear power plants, after all. In terms of capacity utilization, the fraction of energy produced compared to the amount of energy that theoretically could be produced over a period of time with a plant running at 100% of capacity, nuclear energy is the best there is, with most plants around the world operating at 90% or better capacity utilization. Of course doing so depends on the baseload demand being high enough to accept the output of the plant. If electricity is being dumped onto the grid because the sun is shining brightly and the wind is blowing at just the right speed, all of the electricity being produced at a baseload plant like a nuclear plant, as well as the electricity being produced by the solar and wind facilities, is worthless. This uniformly and negatively impacts nuclear economics, coal economics, petroleum economics, gas economics, hydroelectricity economics, and for that matter, solar and wind economics. It also raises - this cannot be stressed enough - the external costs, the costs to the environment, and the more subtle but nonetheless real health costs of energy.
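Capacity utilization (usually called capacity factor) is just actual output divided by the output at 100% power for the whole period. A minimal Python sketch; the 1,000 MW plant and its annual output are hypothetical numbers chosen for illustration:

```python
# Capacity factor: energy actually produced divided by the energy the plant
# would have produced running at 100% of rated power for the whole period.
HOURS_PER_YEAR = 8760


def capacity_factor(energy_mwh: float, rated_mw: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Return the capacity factor as a fraction between 0 and 1."""
    if rated_mw <= 0 or hours <= 0:
        raise ValueError("rated power and period must be positive")
    return energy_mwh / (rated_mw * hours)


# Hypothetical example: a 1,000 MW plant producing 7,884,000 MWh in one year.
print(f"{capacity_factor(7_884_000, 1_000):.0%}")  # 90%
```

Note that the ratio counts only what was delivered; a plant that is forced to back down because the grid cannot absorb its output loses capacity factor even though nothing is wrong with the plant.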

Even if feathering nuclear plants to match low demand can be done, there are some technical issues that need consideration. The most famous and well known of these in the nuclear engineering community concerns the fission products having a mass number of 135, in particular the iodine-135 and xenon-135 species in the decay chain, both of which are highly radioactive, having half-lives of 6.58 hours and 9.18 hours respectively. This "problem" was recognized during the Manhattan Project - where it was managed by engineers and scientists who lived and died by the use of ancient and marvelous devices known as slide rules - and it is relevant today, when accumulations of these isotopes can be modeled to any degree of precision and accuracy required with ever improving computer power. It played a role in the very stupid decisions made by the night crew operating the Chernobyl nuclear reactor that was famously destroyed by a steam explosion.

Xenon-135, with the exception of a very rare and difficult to produce isotope of zirconium (Zr-88), has the highest neutron capture cross section of any known nuclide. When a nuclear reactor is operating at full power, a situation known as "Bateman equilibrium" exists, during which xenon-135 is being destroyed, either by absorbing a neutron to give non-radioactive and economically valuable Xe-136 or by decaying to radioactive Cs-135, as fast as it is being formed. Thus at a given level of power, the rate at which a fission product is formed is more or less constant, whereas the rate at which it decays rises in proportion to the amount of it present.

The Bateman equations are a series of differential equations that can be solved numerically, and, apparently, exactly.

Here is a somewhat more sophisticated analysis of the Bateman equation as it applies to xenon-135: Solving Bateman Equation for Xenon Transient Analysis Using Numerical Methods (Ding, MATEC Web of Conferences 186, 01004 (2018))

The Bateman equation for xenon is this:
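The equation itself did not survive in this copy of the post. The following is a reconstruction from the term-by-term description that follows, written in common textbook notation; the symbol names are my assumption and may differ from those in Ding's paper. X and I are the xenon-135 and iodine-135 concentrations, γ the fission yields, Σ_f the macroscopic fission cross section, φ the neutron flux, λ the decay constants and σ_a^X the microscopic neutron absorption cross section of xenon-135. Setting both derivatives to zero gives the full-power equilibrium.

```latex
\frac{dI}{dt} = \gamma_I \Sigma_f \phi - \lambda_I I

\frac{dX}{dt} = \gamma_X \Sigma_f \phi + \lambda_I I - \lambda_X X - \sigma_a^X \phi X

% Full-power equilibrium (dI/dt = dX/dt = 0):
I_{eq} = \frac{\gamma_I \Sigma_f \phi}{\lambda_I},
\qquad
X_{eq} = \frac{(\gamma_I + \gamma_X)\,\Sigma_f \phi}{\lambda_X + \sigma_a^X \phi}
```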

The first term in this equation refers to the fission yield of a nuclide, in this case, Xenon-135. The second term represents the amount that is being produced by the decay of iodine-135 (which has its own Bateman equation). The third term represents the radioactive decay of xenon-135. The fourth term represents the loss of radioactive xenon-135 when it absorbs a neutron and is converted into non-radioactive (stable) xenon-136, something that no other nuclide (except Zr-88) has as high a probability of doing.

The symbol φ in this equation's first and fourth terms is the symbol for the neutron flux, the number of neutrons passing through a unit of area per unit of time when the system is critical.

When a reactor shuts down, φ goes essentially to zero (ignoring trivial spontaneous fission), so the neutron capture portion of the equation is no longer present, and neither is the fission yield portion. The Bateman equilibrium thus shifts in such a way that xenon-135 accumulates according to the second and third terms, and the amount of xenon-135 present begins to rise, since it has a longer half-life than its iodine-135 parent. At some point iodine-135 depletes to a level such that the accumulated xenon-135 is decaying as fast as it is formed. Thereafter its concentration begins to fall. It is problematic to restart a nuclear reactor until enough of the xenon-135 decays to the level found in the Bateman equilibrium that exists during full power operation. Thus for "normal" electricity generation nuclear reactors, shutting them down leads to a lag time between shutdown and restart, meaning that the power is not available to meet increased demand for several hours.

Consideration of this fact is what led the fools operating the failed Chernobyl reactor to violate protocol and pull all of the control rods out of the reactor to conduct their "test" with all safety systems disabled. It was, um, a bad idea.
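The shutdown transient described above can be sketched in Python using the closed-form solution of the iodine and xenon equations with the flux set to zero, starting from the full-power equilibrium. The half-lives are the ones quoted in the text; the fission yields, the xenon cross section and the pre-shutdown flux are assumed typical textbook values for U-235 fuel, not numbers from Ding's paper, so the peak time and height are illustrative only.

```python
import math

# Half-lives quoted in the text; everything below them is an ASSUMED
# textbook-style value for U-235 fuel, used only for illustration.
HALF_LIFE_I_H = 6.58     # iodine-135, hours
HALF_LIFE_XE_H = 9.18    # xenon-135, hours
GAMMA_I = 0.0639         # assumed cumulative fission yield of I-135
GAMMA_XE = 0.00237       # assumed direct fission yield of Xe-135
SIGMA_XE_CM2 = 2.65e-18  # assumed Xe-135 absorption cross section (~2.65 million barns)
FLUX = 3e13              # assumed pre-shutdown neutron flux, n/cm^2/s
FISSION_RATE = 1.0       # Sigma_f * phi in arbitrary units (results scale linearly)

LAM_I = math.log(2) / (HALF_LIFE_I_H * 3600)   # decay constants, 1/s
LAM_XE = math.log(2) / (HALF_LIFE_XE_H * 3600)


def equilibrium():
    """Full-power equilibrium concentrations from dI/dt = dX/dt = 0."""
    i_eq = GAMMA_I * FISSION_RATE / LAM_I
    x_eq = (GAMMA_I + GAMMA_XE) * FISSION_RATE / (LAM_XE + SIGMA_XE_CM2 * FLUX)
    return i_eq, x_eq


def xenon_after_shutdown(t_s, i0, x0):
    """Analytic Xe-135 concentration t_s seconds after shutdown (flux = 0):
    no fission yield and no burnout, only iodine feed and xenon decay."""
    feed = LAM_I * i0 / (LAM_XE - LAM_I)
    return (x0 * math.exp(-LAM_XE * t_s)
            + feed * (math.exp(-LAM_I * t_s) - math.exp(-LAM_XE * t_s)))


i0, x0 = equilibrium()
# Scan 72 hours in 6-minute steps to locate the xenon peak.
times_h = [k / 10 for k in range(0, 721)]
values = [xenon_after_shutdown(t * 3600, i0, x0) for t in times_h]
peak_value = max(values)
peak_hour = times_h[values.index(peak_value)]
print(f"Xe-135 peaks ~{peak_hour:.1f} h after shutdown at "
      f"{peak_value / x0:.1f}x its full-power level")
```

With these assumed values the xenon concentration rises for several hours before falling back; higher pre-shutdown flux burns out more xenon during operation and produces a larger relative peak.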

It is worth noting, by the way, that Bateman equilibrium can be reached by any radioactive nuclide, including all of the radioactive fission products and all induced radioactive materials, a fact that escapes most critics of nuclear energy while they make insipid comments about so called "nuclear waste," all the while ignoring the far more serious issue of dangerous fossil fuel waste. There is an upper limit, depending on power levels, to the mass of radioactive materials that it is possible to accumulate, beyond which the amount of radioactive fission products cannot rise. (It can be shown that this limit is actually approached asymptotically.) This is a situation that does not apply to dangerous fossil fuel waste, which is far more massive and, unlike used nuclear fuel, impossible to contain.
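The asymptotic limit follows from the simplest case of the Bateman equations: a nuclide produced at a constant rate P and decaying with decay constant λ, so that dN/dt = P - λN. A short Python sketch; the production rate and half-life below are hypothetical, chosen only to show the shape of the curve:

```python
import math


def inventory(t, production_rate, half_life):
    """Atoms present after time t for constant production and first-order decay.

    Solves dN/dt = P - lambda * N with N(0) = 0:
    N(t) = (P / lambda) * (1 - exp(-lambda * t)), which approaches P / lambda.
    """
    lam = math.log(2) / half_life
    return production_rate / lam * (1.0 - math.exp(-lam * t))


# Hypothetical nuclide: produced at 1,000 atoms per year, half-life 30 years.
P, T_HALF = 1000.0, 30.0
ceiling = P / (math.log(2) / T_HALF)  # the equilibrium (maximum) inventory
for years in (30, 60, 150, 300):
    print(f"{years:>3} y: {inventory(years, P, T_HALF) / ceiling:.3f} of the ceiling")
```

Each additional half-life halves the remaining gap to the ceiling P/λ, so the inventory reaches 50% after one half-life, 75% after two, and never exceeds P/λ no matter how long production continues.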

Anyway.

Another factor that arises when one considers shutting down a nuclear reactor because of low demand is far more subtle, and rarely, if ever, discussed: doing so wastes neutrons. Neutron economy is a key to saving the world. Nevertheless, this point is so subtle I won't discuss it further.

The point of all these mouthfuls is that the best way to operate a nuclear reactor is flat out, at maximum power, continuously. In my view, however, it is wasteful to design, build and operate nuclear reactors solely to produce electricity, again, a thermodynamically degraded form of energy. Rather - again my opinion - it would be useful to generate electricity as a side product while producing the very thing I have just got done criticizing, stored energy, specifically chemical energy. Forms of stored chemical energy include the energy in dangerous fossil fuels, which is solar energy stored over many thousands of millennia (but burned in just a few centuries), as well as...wait for it...the energy in batteries.

Batteries...batteries...didn't I just get done saying batteries suck?

Indulge me...

Modern nuclear reactors, as Rankine cycle devices (for the most part), operate at a thermal efficiency of around 33%, which - this is hardly the first time I've pointed this out - is lower than the thermal efficiency of a combined cycle natural gas plant, many of which operate at thermal efficiencies of around 50%, sometimes even more.

As one should learn in a good high school science class, theoretical thermal efficiency - Carnot efficiency - is the highest for devices that operate at the greatest difference between the temperature of the heat source and the heat sink. If one follows the link just shown for "Carnot Efficiency" one can calculate the theoretical efficiency of a heat engine running between the boiling point of strontium metal, 1650 Kelvin, and "room temperature," generally taken as 298 Kelvin, for the heat sink (the Kelvin temperature scale, on which absolute zero is zero, is required for thermodynamic calculations), to learn that in theory a heat engine operating over this range would have a maximum efficiency of 81.94%. In practice, the actual efficiency of a device operating at these temperatures would be lower, but it is impossible for it to be higher. The gap between ideal Carnot efficiency and the efficiency of real heat engines arises because no material is a perfect insulator - all substances conduct some heat (otherwise well insulated houses would not require heating systems) - and no heat exchange process is reversible, that is, entropy free.

This said, the rejection of heat can be minimized, particularly at high temperatures and in situations where entropy generation is minimized, not eliminated (which is impossible), but minimized. This is the idea behind heat networks.
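The Carnot limit used in these paragraphs is simply 1 - T_cold/T_hot with both temperatures in kelvin. A minimal Python version of the calculator, reproducing the strontium example (the function name is mine, for illustration):

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Maximum (Carnot) efficiency of a heat engine between two reservoirs.

    Both temperatures must be absolute (kelvin); Celsius gives wrong answers.
    """
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < T_cold < T_hot, in kelvin")
    return 1.0 - t_cold_k / t_hot_k


# Boiling strontium (1650 K) rejecting heat to room temperature (298 K).
print(f"{carnot_efficiency(1650, 298):.2%}")  # 81.94%
```

The same function gives the other limits used below: 1650 K to 1100 K yields about 33%, 900 K to 500 K about 44%, and 500 K to 300 K 40%.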

The following commentary is very, very crude, and is not intended to be accurate but rather illustrative.

Consider a device with boiling strontium metal at 1650 K at 101,000 Pascals of pressure. In theory, assuming that all materials science issues are addressed, the strontium gas (thermal energy) could expand against a turbine to produce mechanical work, which could then be used to turn a generator to produce electricity; the electricity could be converted in a battery to stored chemical energy, which would then be available to be reconverted to electrical energy to drive a motor to produce mechanical work. Seven conversions of one form of energy into another are involved in this process that could power, say, one of those Tesla cars for millionaires and billionaires. The theoretical maximum Carnot efficiency, using the calculator linked above, and assuming the low temperature reservoir is 1100 K, 50 K above the melting point of strontium metal (1050 K), is 33%. If the turbine process is 33% efficient, the generation and transport of the electricity is 90% efficient, the charging of the battery is 90% efficient, the conversion of the chemical energy in the battery to electricity is 90% efficient, and the electric motor is 90% efficient, then the overall efficiency is 0.9 * 0.9 * 0.9 * 0.9 * 0.33 = approximately 22%. Almost 80% of the energy is lost as heat rejected to the atmosphere.
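Because the conversions happen in series, the overall efficiency is the product of the stage efficiencies. A short Python sketch of the crude strontium-to-car-motor chain, using the stage values assumed in the paragraph above (the 33% turbine figure takes the turbine at its Carnot limit, as the text does):

```python
import math


def chain_efficiency(stages):
    """Overall efficiency of energy conversions in series: the product of the stages."""
    return math.prod(stages)


carnot_limit = 1 - 1100 / 1650  # boiling strontium (1650 K) to a 1100 K sink
stages = {
    "turbine (at its Carnot limit)": 0.33,
    "generation and transmission": 0.90,
    "battery charging": 0.90,
    "battery discharging": 0.90,
    "electric motor": 0.90,
}
overall = chain_efficiency(stages.values())
print(f"Carnot limit: {carnot_limit:.1%}, overall: {overall:.1%}, "
      f"rejected as heat: {1 - overall:.1%}")
```

The product comes to about 21.7%, so roughly 78% of the original heat ends up rejected; adding any further lossy stage only multiplies the result down.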

Suppose though that instead of expanding against a turbine, the hot strontium gas is used to heat a wet solution of concentrated sulfuric acid, again with all materials science issues addressed. As the sulfuric acid reaches about 1300 K, it will decompose into oxygen, sulfur dioxide and steam, all gaseous and all hot. Suppose that these gases are cooled in a tubular heat exchanger by compressed carbon dioxide, which is heated during this process to around 900 K and expands against a turbine; the carbon dioxide is then cooled by heating pressurized water to steam at 500 K, which in turn expands against a turbine and is brought to 300 K, "room temperature." Using the handy Carnot calculator above, the carbon dioxide (Brayton) heat engine has an efficiency limit of 44%. The steam heat engine has an efficiency limit of 40%. Let us return to the gases generated by the decomposition of sulfuric acid though. There are means to separate the oxygen and the sulfur dioxide, and let's consider that the sulfur dioxide is pumped into a mixture of steam and iodine. Under these conditions an exothermic reaction takes place, the Bunsen reaction (SO2 + I2 + 2H2O → H2SO4 + 2HI), whereupon the sulfur dioxide is oxidized back to sulfuric acid while the iodine, taking hydrogen from the water, becomes hydrogen iodide gas. If this mixture is cooled to condense the sulfuric acid, the hydrogen iodide gas can be heated (to about 600 K) to generate hydrogen gas while regenerating iodine. The series of chemical reactions described is known as the sulfur iodine cycle, which sums to give the decomposition of water into hydrogen and oxygen and which has a practical thermodynamic efficiency of between 55 and 65%, and a theoretical efficiency of 71%. Let's assume the worst case, 55% efficiency, meaning 45% is lost. But the carbon dioxide heat engine recovers, let's say, in a real engine, something like 40% of this loss, or 18% of the original energy, reducing the overall loss to 45% - 18% = 27%. 
If the real steam cycle operates at 33%, then 33% * 27% = 9%. Thus the overall efficiency of the entire process is 9% + 18% + 55% = 82%, with some of the 55% represented as directly stored chemical energy produced without the use of mechanical and electrical intermediates.
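The energy bookkeeping of this cascade can be written out as a short Python sketch. It assumes exactly the illustrative numbers used in the text (a 55% sulfur iodine cycle, 40% recovery by the carbon dioxide cycle, 33% by the steam cycle), and it checks that the two heat-engine assumptions stay under their Carnot limits for 900 K to 500 K and 500 K to 300 K.

```python
def sulfur_iodine_cascade(
    si_efficiency: float = 0.55,
    co2_recovery: float = 0.40,
    steam_efficiency: float = 0.33,
) -> dict[str, float]:
    """Fractions of the original heat ending up as hydrogen or turbine work.

    The S-I cycle stores si_efficiency as chemical energy; the CO2 Brayton cycle
    recovers co2_recovery of the heat the S-I cycle rejects; the steam cycle then
    recovers steam_efficiency of whatever the CO2 cycle passes on.
    """
    # Assumed engine efficiencies must not beat their Carnot limits.
    if co2_recovery > 1 - 500 / 900:      # CO2 cycle, 900 K -> 500 K, about 44%
        raise ValueError("CO2 cycle exceeds its Carnot limit")
    if steam_efficiency > 1 - 300 / 500:  # steam cycle, 500 K -> 300 K, 40%
        raise ValueError("steam cycle exceeds its Carnot limit")

    rejected_by_si = 1.0 - si_efficiency             # 45% in the worst case
    co2_work = co2_recovery * rejected_by_si          # 18% of the original heat
    passed_to_steam = rejected_by_si - co2_work       # 27% handed to the steam cycle
    steam_work = steam_efficiency * passed_to_steam   # about 9%
    return {
        "hydrogen (chemical)": si_efficiency,
        "CO2 turbine work": co2_work,
        "steam turbine work": steam_work,
        "total useful": si_efficiency + co2_work + steam_work,
    }


for name, value in sulfur_iodine_cascade().items():
    print(f"{name:>20}: {value:.1%}")
```

The total comes to roughly 82%, and the two turbine terms together are about 27% of the original heat, the mechanical energy discussed next.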

But 18% + 9% = 27% of the original heat energy is now in the form of mechanical energy, spinning turbines. The simplest thing to do with this mechanical energy, perhaps not the most efficient thing to do with it, is to generate electricity. It is important to contrast the hydrogen produced in this case with the lithium in a chemical battery. The hydrogen can be shipped anywhere, and be used in any way. Indeed, if the hydrogen is used to reduce carbon dioxide to produce the wonder fuel dimethyl ether, the reaction will be exothermic, meaning that additional energy can be extracted using a turbine whenever demand justifies doing so. The dimethyl ether can be shipped anywhere and used at will to replace all the uses of dangerous natural gas, all the uses of dangerous LPG, all the uses of dangerous diesel fuel, and it can also function as a refrigerant/heat exchange medium.
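The claim that the sulfur iodine reactions sum to water splitting, and that hydrogen plus carbon dioxide can give dimethyl ether, can be checked by atom counting. This sketch assumes the standard textbook stoichiometries (Bunsen reaction I2 + SO2 + 2 H2O → 2 HI + H2SO4; direct synthesis 2 CO2 + 6 H2 → CH3OCH3 + 3 H2O). It checks element balances only, not thermodynamics, and its small formula parser handles only formulas without parentheses.

```python
import re
from collections import Counter
from fractions import Fraction


def atoms(formula: str) -> Counter:
    """Count atoms in a simple formula without parentheses, e.g. 'H2SO4'."""
    counts: Counter = Counter()
    for element, digits in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(digits) if digits else 1
    return counts


def is_balanced(reaction: dict) -> bool:
    """A reaction maps species to coefficients: negative if consumed, positive if produced."""
    net: Counter = Counter()
    for species, coeff in reaction.items():
        for element, n in atoms(species).items():
            net[element] += coeff * n
    return all(v == 0 for v in net.values())


def combine(*reactions: dict) -> dict:
    """Add reactions together and cancel species that appear on both sides."""
    total: Counter = Counter()
    for reaction in reactions:
        for species, coeff in reaction.items():
            total[species] += coeff
    return {species: c for species, c in total.items() if c != 0}


HALF = Fraction(1, 2)
bunsen = {"I2": -1, "SO2": -1, "H2O": -2, "HI": 2, "H2SO4": 1}   # exothermic, low temperature
acid_split = {"H2SO4": -1, "SO2": 1, "H2O": 1, "O2": HALF}      # endothermic, high temperature
hi_split = {"HI": -2, "H2": 1, "I2": 1}                          # hydrogen release
dme_synthesis = {"CO2": -2, "H2": -6, "CH3OCH3": 1, "H2O": 3}    # hydrogen + CO2 -> dimethyl ether

for name, r in [("Bunsen", bunsen), ("H2SO4 split", acid_split),
                ("HI split", hi_split), ("DME synthesis", dme_synthesis)]:
    print(f"{name}: balanced = {is_balanced(r)}")

# Sulfur and iodine species cancel, leaving H2O -> H2 + 1/2 O2.
print("Net S-I cycle:", combine(bunsen, acid_split, hi_split))
```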

Let's leave aside the sloppiness of this crude, but illustrative, rhetoric and cut to the environmental and economic chase.

Of course, this process, were it to be both economically and environmentally sustainable, would be required to be continuous, meaning that the two heat-engine energy recovery cycles would also run continuously. We should assume that the bad, but popular, idea of pursuing so-called "renewable energy" may continue for a while, until both the futility and the unsustainability of this pursuit become obvious, as they must - we are seeing the first shots across the bow already. Therefore we must also assume that there will be portions of the day over the next several decades when electricity will be of extremely low value or even worthless, at least until all this so-called "renewable energy" junk has begun to rot, requiring future generations to clean up the mess.

If electricity is worthless during a putative sulfur iodine process which produces electricity as a side product, what might be done with it in such a way as to not lose money?

This long, boring, convoluted argument brings me to the paper discussed at the outset. Why not use this electricity to recover valuable materials from seawater while generating fresh water (drinking water, irrigation water) as a side product during the times electricity is next to worthless or totally worthless, while supplying the electricity to the grid, carbon free, when demand and supply make it valuable? In considering this, one should recognize that capacitive desalination/metal recovery is but one approach to valorizing worthless electricity produced at times when there is no need for it on the grid, for example on windy sunny days in the proposed but idiotic "renewable energy" nirvana that has not come, is not here, and, as a practical consideration, will not come.

From the introduction of the paper cited at the outset:

Adsorptive membranes are an emerging class of materials that have been shown to exhibit improved performance in numerous separations when compared with conventional membranes, including for water purification (4, 9–13). However, improvements are needed in the capacities, selectivities, and regenerabilities achievable with these materials to enable their wide-scale use, which is also currently hindered by the limited structural and chemical tunability of most adsorptive membranes (4). We therefore sought to develop a highly modular adsorptive membrane platform for use in multifunctional water-purification applications, which is based on the incorporation of porous aromatic frameworks (PAFs) into ion exchange membranes. Built of organic nodes and aromatic linkers, PAFs have high-porosity diamondoid structures with pore morphologies and chemical affinities that can be tuned through the choice of node and linker (Fig. 1, A and B) (14, 15).

The authors then list the elements that might be recovered from seawater but that, in current membrane desalination practice, are returned to it. Two of these, mercury and lead, are toxic elements added to the ocean by anthropogenic activities, chiefly the combustion of the dangerous fossil fuel coal, but also by other sources, for example mercury used as ballast in sunken submarines, and lead weights. Others include uranium, which is a natural constituent of seawater and has a long history of consideration as a source of this inexhaustible element, as well as copper, gold, boron, neodymium and iron.

The graphics in this paper, which I will reproduce shortly, focus on mercury.

In 2014, it was estimated that the concentration of mercury in ocean water has tripled since preindustrial times, with most of the mercury concentrated in surface waters: Lamborg, C., Hammerschmidt, C., Bowman, K. et al. A global ocean inventory of anthropogenic mercury based on water column measurements. Nature 512, 65–68 (2014).

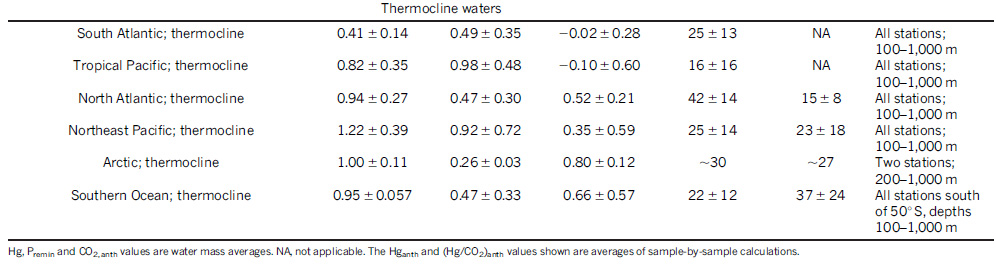

Here is a table from that paper, suggesting concentration gradients in various places in the oceans:

The "thermocline" here can be taken as surface waters.

Since the atomic weight of mercury is about 200.6, a concentration of 1 pmol/kg, found in some places, works out to about 0.2 ng/kg, roughly 0.2 ng per liter, or about 0.2 parts per trillion. The US EPA drinking water standard for mercury, 2 ppb (0.002 mg/L), is roughly ten thousand times higher. Certainly there are regions in this country where that standard is exceeded. As mercury is a neurotoxin which can cause, in excess, insanity, I sometimes muse to myself, more than half seriously, whether mercury and lead play a role in the rise of the Magats in this country. (How else can one explain the worship of a cheap white trash carny barker with poor taste, no ethics, and - there's something wrong with this - a history of handling - and losing - oodles of money?)

Even at roughly 1/10,000th of drinking water standards, mercury in the ocean is not a cause for comfort: it is converted to methylmercury, which accumulates up the food chain in fish.
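The unit conversion behind these numbers is easy to get wrong by factors of a thousand, so here it is as a small Python sketch. It assumes a typical seawater density of 1.025 kg/L and takes the EPA limit for inorganic mercury as 0.002 mg/L (2,000 ng/L).

```python
HG_MOLAR_MASS_G_PER_MOL = 200.59
SEAWATER_DENSITY_KG_PER_L = 1.025   # assumed typical seawater density
EPA_MCL_HG_NG_PER_L = 2_000.0       # EPA limit for inorganic mercury: 0.002 mg/L = 2 ppb


def pmol_per_kg_to_ng_per_l(c_pmol_per_kg: float) -> float:
    """Convert a seawater mercury concentration from pmol/kg to ng/L."""
    pg_per_kg = c_pmol_per_kg * HG_MOLAR_MASS_G_PER_MOL  # pmol * g/mol = pg
    ng_per_kg = pg_per_kg / 1_000.0
    return ng_per_kg * SEAWATER_DENSITY_KG_PER_L


ocean_ng_per_l = pmol_per_kg_to_ng_per_l(1.0)
ratio = EPA_MCL_HG_NG_PER_L / ocean_ng_per_l
print(f"1 pmol/kg is about {ocean_ng_per_l:.2f} ng/L")
print(f"The EPA limit is about {ratio:,.0f} times higher")
```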

In its abstract, the Nature paper gives a wide-ranging estimate of the amount of mercury humanity has added to the oceans, the upper estimate being around 1,300 million moles, which works out to around 260,000 metric tons.
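As a quick check on the moles-to-tons conversion, here is a short Python sketch. It assumes the upper estimate of 1,300 million moles quoted above and a molar mass of 200.59 g/mol; "tons" here means metric tons of one million grams.

```python
HG_MOLAR_MASS_G_PER_MOL = 200.59


def moles_to_metric_tons(moles: float, molar_mass_g_per_mol: float) -> float:
    """Convert an amount in moles to metric tons (1 t = 1e6 g)."""
    return moles * molar_mass_g_per_mol / 1e6


upper_estimate_mol = 1_300e6   # upper bound quoted from the Nature abstract
tons = moles_to_metric_tons(upper_estimate_mol, HG_MOLAR_MASS_G_PER_MOL)
print(f"{tons:,.0f} metric tons")
```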

The Science paper cited at the outset, in the supplementary materials, makes the following statements about a putative mercury capture membrane desalination plant:

We also estimate the amount of water that can potentially be treated in an ion-capture electrodialysis plant per regeneration cycle, based on a typical industrial electrodialysis design. Here, we make the same performance assumptions as described above and assume 20 wt% PAF-1-SH in sPSF membranes are implemented as the cation exchange membranes. While electrodialysis designs and sizes vary by plant (51-55), we assumed the following design parameters based on typical setups reported:

• 300 membrane stack pairs (i.e., 300 cation exchange membranes consisting of 20 wt% PAF-1-SH in sPSF) (52-54)

• 1-m2 active area per membrane (51, 52)

• 300-µm thickness for each membrane (52, 54, 55)

Based on this design, a total 20 wt% PAF-1-SH membrane volume of 90 L, and thus a total PAF-1-SH mass of 16.8 kg, is expected for such a plant. The PAF-1-SH mass was determined by assuming that the 20 wt% PAF-1-SH membranes have a density of 0.931 kg L⁻¹. This density was determined as the volume-averaged density between bulk PAF-1-SH and sPSF (0.420 kg L⁻¹ and 1.337 kg L⁻¹, respectively; see Section 1.4.6), using the 44.3 vol% PAF-1-SH value determined for a 20 wt% PAF-1-SH membrane (table S2). With the PAF-1-SH performance assumptions previously discussed, we estimate that the following volumes of water can be treated in an ion-capture electrodialysis plant before regeneration is required:

• ~3,000,000 L of water treated for a feed source containing 5 ppm Hg2+

• ~15,000,000 L of water treated for a feed source containing 1 ppm Hg2+

• ~150,000,000 L of water treated for a feed source containing 0.1 ppm Hg2+
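The plant-sizing arithmetic in the quoted supplementary text can be reproduced with a short Python sketch. All design numbers come from the quote; the one added assumption is that 1 ppm in dilute water is about 1 mg/L, which shows that the three treated volumes correspond to the same mercury load of about 15 kg per regeneration (roughly 0.9 g per gram of PAF-1-SH).

```python
# Design parameters quoted from the supplementary materials.
N_MEMBRANES = 300
ACTIVE_AREA_M2 = 1.0
THICKNESS_M = 300e-6
PAF_DENSITY_KG_PER_L = 0.420
SPSF_DENSITY_KG_PER_L = 1.337
PAF_VOLUME_FRACTION = 0.443   # 44.3 vol% for a 20 wt% membrane
PAF_WEIGHT_FRACTION = 0.20

membrane_volume_l = N_MEMBRANES * ACTIVE_AREA_M2 * THICKNESS_M * 1_000.0  # m^3 -> L
membrane_density = (PAF_VOLUME_FRACTION * PAF_DENSITY_KG_PER_L
                    + (1 - PAF_VOLUME_FRACTION) * SPSF_DENSITY_KG_PER_L)
paf_mass_kg = membrane_volume_l * membrane_density * PAF_WEIGHT_FRACTION

print(f"Membrane volume:  {membrane_volume_l:.0f} L")
print(f"Membrane density: {membrane_density:.3f} kg/L")
print(f"PAF-1-SH mass:    {paf_mass_kg:.1f} kg")

# ppm Hg2+ in the feed -> liters treated per regeneration, from the quote.
feeds = {5.0: 3e6, 1.0: 15e6, 0.1: 150e6}
# Assumes 1 ppm ~ 1 mg/L; mg -> kg.
loads_kg = {ppm: ppm * liters / 1e6 for ppm, liters in feeds.items()}
for ppm, kg in loads_kg.items():
    print(f"{ppm:>4} ppm feed: about {kg:.0f} kg Hg captured per regeneration")
```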

The membranes are regenerated by washing with HCl, which is readily available from seawater through other electrochemical processes, for example Heather Willauer's process for recovering carbon dioxide from seawater to make jet fuel: Feasibility of CO2 Extraction from Seawater and Simultaneous Hydrogen Gas Generation Using a Novel and Robust Electrolytic Cation Exchange Module Based on Continuous Electrodeionization Technology (Heather D. Willauer, Felice DiMascio, Dennis R. Hardy, and Frederick W. Williams Industrial & Engineering Chemistry Research 2014 53 (31), 12192-12200). This acid can be neutralized by sodium carbonate, perhaps generated in the same process by treatment with air.

In the United States, large-scale water demand is often stated using the somewhat unfortunate unit of volume, the "acre-foot." An "acre-foot" is equal to about 1,233,000 liters of water.

The capacity of the adsorbent is stated to be around 0.4 g Hg/g, meaning that in one cycle, before the membranes require regeneration, the 16.8 kg of PAF-1-SH would capture roughly 6.7 kg of mercury.

The State of California consumes something around 35 million acre-feet of water per year, or roughly 43 trillion liters.

Suppose some future generation, smarter than the one into which I was born, more appreciative of the planet than my generation of bourgeois consumers, decided to restore the environment of California, to do things like refill the depleting groundwater of the Central Valley, refill Owens Lake, open the dams on the Sacramento River to let the salmon run, blow up the Hetch Hetchy dam and recreate that magnificent Hetch Hetchy Canyon, restore the Colorado River Delta... This is, perhaps, a stupid dream, a big dream, a wild dream, but at the end of one's life, a dream worth having, speaking only for myself.

Suppose that California also decided to remove the greasy, bird-grinding wind turbines from desert and chaparral wilderness, remove the mirrors, the heat transfer fluids, etc.; suppose California decided to ban the use of dangerous natural gas and all fossil fuels.

...a dream worth having, it's called "hope," Pandora's hope, perhaps, but hope...

If, by regulation, all of California's water, 43 trillion liters of it, perhaps even more, were obtained from the sea, then at one regeneration per 150 million liters and roughly 6.7 kg of mercury per regeneration, California's plants would in theory cycle through enough sorbent capacity to capture about 1,900 tons of mercury per year, although the mercury actually recovered can be no more than what the seawater carries.

Just saying...
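The California arithmetic can be laid out end to end in a short Python sketch. It assumes the 35 million acre-feet, the 150 million liters per regeneration, and the 0.4 g/g capacity on 16.8 kg used in the text, plus a seawater mercury concentration of about 1 pmol/kg (the order of the Lamborg et al. values) and a seawater density of 1.025 kg/L. It separates the mercury the sorbent could hold over a year of regenerations from the mercury that much seawater actually contains.

```python
LITERS_PER_CUBIC_FOOT = 28.316846592
LITERS_PER_ACRE_FOOT = 43_560 * LITERS_PER_CUBIC_FOOT   # one acre (43,560 sq ft), one foot deep

CA_USE_ACRE_FEET_PER_YEAR = 35e6      # figure used in the text
LITERS_PER_REGENERATION = 150e6       # supplementary-materials figure for a 0.1 ppm feed
HG_KG_PER_REGENERATION = 16.8 * 0.4   # the text's capacity figure, about 6.7 kg

# Assumed seawater mercury of about 1 pmol/kg at an assumed density of 1.025 kg/L.
SEAWATER_HG_MOL_PER_KG = 1e-12
SEAWATER_DENSITY_KG_PER_L = 1.025
HG_MOLAR_MASS_G_PER_MOL = 200.59

liters_per_year = CA_USE_ACRE_FEET_PER_YEAR * LITERS_PER_ACRE_FOOT
regenerations = liters_per_year / LITERS_PER_REGENERATION
sorbent_capacity_t = regenerations * HG_KG_PER_REGENERATION / 1_000.0

# kg seawater -> mol Hg -> g Hg -> kg Hg
hg_present_kg = (liters_per_year * SEAWATER_DENSITY_KG_PER_L
                 * SEAWATER_HG_MOL_PER_KG * HG_MOLAR_MASS_G_PER_MOL / 1_000.0)

print(f"One acre-foot: {LITERS_PER_ACRE_FOOT:,.0f} L")
print(f"Annual use: {liters_per_year:.2e} L, {regenerations:,.0f} regenerations")
print(f"Sorbent capacity cycled: {sorbent_capacity_t:,.0f} t Hg per year")
print(f"Mercury actually carried by that seawater: {hg_present_kg:.1f} kg")
```

The two final numbers differ by about five orders of magnitude, because the regeneration schedule quoted in the supplementary materials is set for a 0.1 ppm feed, far richer in mercury than ordinary seawater.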

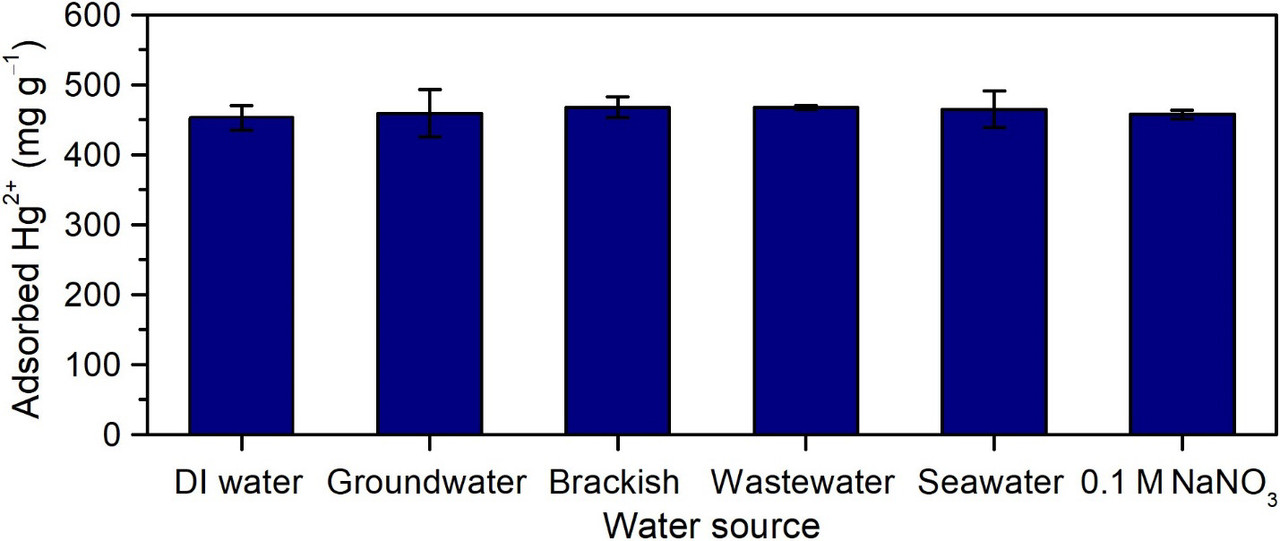

Some graphics from the body of the paper:

The caption:

(A and B) Tunable composite membranes were prepared by embedding PAFs with selective ion binding sites into cation exchange polymer matrices. (C) We demonstrate the use of these adsorptive membranes in an electrodialysis-based process for the selective capture of target cations (right-hand side) from water and simultaneous desalination. Water splitting occurs at both electrodes to maintain electroneutrality. (D and E) PAF-embedded membranes are defect-free and exhibit optical transparency and high flexibility. (F) Cross-sectional scanning electron micrographs (expanded view in inset) revealed high PAF dispersibility and strong, favorable interactions between the PAF and polymer matrix

The caption:

(A and B) Composite membranes exhibit increasing water uptake, swelling resistance, and glass transition temperature (Tg) with increasing PAF-1-SH loading. (C) Comparison of equilibrium Hg2+ uptake in neat sPSF and sPSF with 20 wt % PAF-1-SH. Solid lines represent fits with a Langmuir model. Mercury ion uptake in the composite membrane closely approaches the predicted saturation uptake (329 mg/g), assuming all binding sites in the PAF particles are accessible. (D) Equilibrium uptake of Hg2+ in neat sPSF and sPSF with 20 wt % PAF-1-SH exposed to deionized (DI) water and various synthetic water samples with 100 ppm added Hg2+. (E) Mercury ion uptake in 20 wt % PAF-1-SH membranes as a function of cycle number. Minimal decrease in Hg2+ uptake occurs over 10 cycles. The initial Hg2+ concentration was 100 ppm for each cycle, and all Hg2+ captured in each cycle was recovered by using HCl and NaNO3. Error bars denote ±1 SD around the mean from at least three separate measurements.
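Panel C mentions Langmuir fits, which describe uptake that rises with concentration and levels off at a saturation capacity. This minimal Python sketch assumes the standard single-site Langmuir form q = q_max·K·C/(1 + K·C) and a linearized least-squares fit (C/q against C); the paper does not say which fitting method it used. Only the 329 mg/g saturation value comes from the caption. The affinity constant and concentrations are hypothetical, and the "data" are synthetic points generated from the model, not the paper's measurements.

```python
def langmuir(c: float, q_max: float, k: float) -> float:
    """Langmuir isotherm: equilibrium uptake q at concentration c."""
    return q_max * k * c / (1.0 + k * c)


def fit_langmuir(conc: list[float], uptake: list[float]) -> tuple[float, float]:
    """Fit q_max and K from the linear form C/q = C/q_max + 1/(K*q_max)."""
    xs = conc
    ys = [c / q for c, q in zip(conc, uptake)]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # equals 1/q_max
    intercept = mean_y - slope * mean_x   # equals 1/(K*q_max)
    return 1.0 / slope, slope / intercept


# Synthetic points from the model: q_max from the caption, K hypothetical (L/mg).
conc = [5.0, 10.0, 25.0, 50.0, 100.0, 200.0, 400.0]   # mg/L, hypothetical
uptake = [langmuir(c, 329.0, 0.05) for c in conc]      # mg/g

q_fit, k_fit = fit_langmuir(conc, uptake)
print(f"q_max = {q_fit:.1f} mg/g, K = {k_fit:.3f} L/mg")
```

With real, noisy measurements a direct nonlinear fit is often preferred, because the linearization weights low-concentration points unevenly.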

The caption:

(A to C) Results from IC-ED of synthetic (A) groundwater, (B) brackish water, and (C) industrial wastewater containing 5 ppm Hg2+ by using 20 wt % PAF-1-SH in sPSF (applied voltage, −4 V versus Ag/AgCl). All Hg2+ was selectively captured from the feeds (open circles) without detectable permeation into the receiving solutions (solid circles). (Insets) All other cations were transported across the membranes to desalinate the feeds. The long duration of the IC-ED tests is an artifact of the experimental setup rather than the materials or IC-ED method (section 2.2 of the supplementary materials). (D) Breakthrough data for IC-ED using sPSF embedded with 10 or 20 wt % PAF-1-SH. Receiving Hg2+ concentrations are plotted against the amount of Hg2+ captured at different time intervals (in milligrams per gram of PAF-1-SH in each composite membrane). The predicted capacity (gray dotted line) corresponds to the Hg2+ uptake achieved by using PAF-1-SH powder under analogous testing conditions (section 1.10 of the supplementary materials). (Inset) Concentration of Hg2+ in the receiving solutions for IC-ED processes using neat sPSF (diamonds) and sPSF with 10 wt % PAF-1-SH (squares) and 20 wt % PAF-1-SH (circles), plotted versus time t normalized by the breakthrough time for the 20 wt % PAF-1-SH composite membrane, t0. Mean values determined from two replicate experiments are shown. Initial feed, 100 ppm Hg2+ in 0.1 M NaNO3; applied voltage, −2 V versus Ag/AgCl.

The caption:

(A and B) Cu2+- (A) and Fe3+-capture (B) electrodialysis (applied voltages, −2 and −1.5 V versus Ag/AgCl, respectively) using composite membranes with 20 wt % PAF-1-SMe and PAF-1-ET in sPSF, respectively. HEPES buffer (0.1 M) was used as the source water in each solution to supply competing ions and maintain constant pH. The insets show the successful transport of all competing cations across the membrane to desalinate the feed. (C) B(OH)3-capture diffusion dialysis of groundwater containing 4.5 ppm boron using composite membranes with 20 wt % PAF-1-NMDG in sPSF (no applied voltage). The inset shows results by using neat sPSF membranes for comparison. Open and solid symbols denote feed and receiving concentrations, respectively. Each plot point represents the mean value determined from two replicate experiments. Gray dotted lines indicate recommended maximum contaminant limits imposed by the US Environmental Protection Agency (EPA) for Cu2+ (29), the EPA and World Health Organization for Fe3+ (29, 30), and agricultural restrictions for sensitive crops for B(OH)3 (31).

The impressive selectivity from the Supplementary Material:

The caption:

(Top) Single-component equilibrium uptake of Hg2+ and various common waterborne ions by PAF-1-SH powder (initial concentrations: 0.5 mM). (Bottom) Equilibrium adsorption of Hg2+ by PAF-1-SH powder in different realistic water solutions with 100 ppm added Hg2+. Uptake of Hg2+ by PAF-1-SH from a solution of only Hg2+ (100 ppm) in DI water is also shown for comparison. No loss in Hg2+ capacity occurs in the presence of various abundant competing ions in each solution, indicating exceptional multicomponent selectivity of PAF-1-SH for Hg2+. Reported values and error bars in each figure represent the mean and standard deviation, respectively, obtained from measurements on at least three different samples.

Be safe. Be well. Be vaccinated. Have a pleasant Sunday.

My wife avoided discussing the attire.

When we met, there was a woman on our campus who dressed that way, thus generating quite a bit of interest among science dorks, including, frankly, me.

I married that woman.

My wife and I are having a disagreement.

On the day of my oldest son's second Pfizer...

...I say the lyrics are "My Corona."

She says it's about some woman with a name that sounds like Corona, She-Rona, Ricearona, something like that. Who would make a song up about such a weird name, anyway?

To me, the woman pictured in the tank top is wearing it to prep for her shot.

What say ye?

Profile Information

Gender: Male

Current location: New Jersey

Member since: 2002

Number of posts: 33,564